Lifecycle Phases

Secure interaction between a rollup and EigenDA is composed of three distinct system flows:

- Dispersal: Submitting payload data to the DA network

- Retrieval: Fetching payload data from the DA network

- Verification: Ensuring the integrity and quorum-based certification of data availability. Where and how verification is performed often depends on how an integration is implemented; e.g.:

  - Pessimistic Verification, where a DACert is checked as a pre-inclusion check for a sequencer inbox
  - Optimistic Verification, where a DACert is only verified in a worst-case challenge

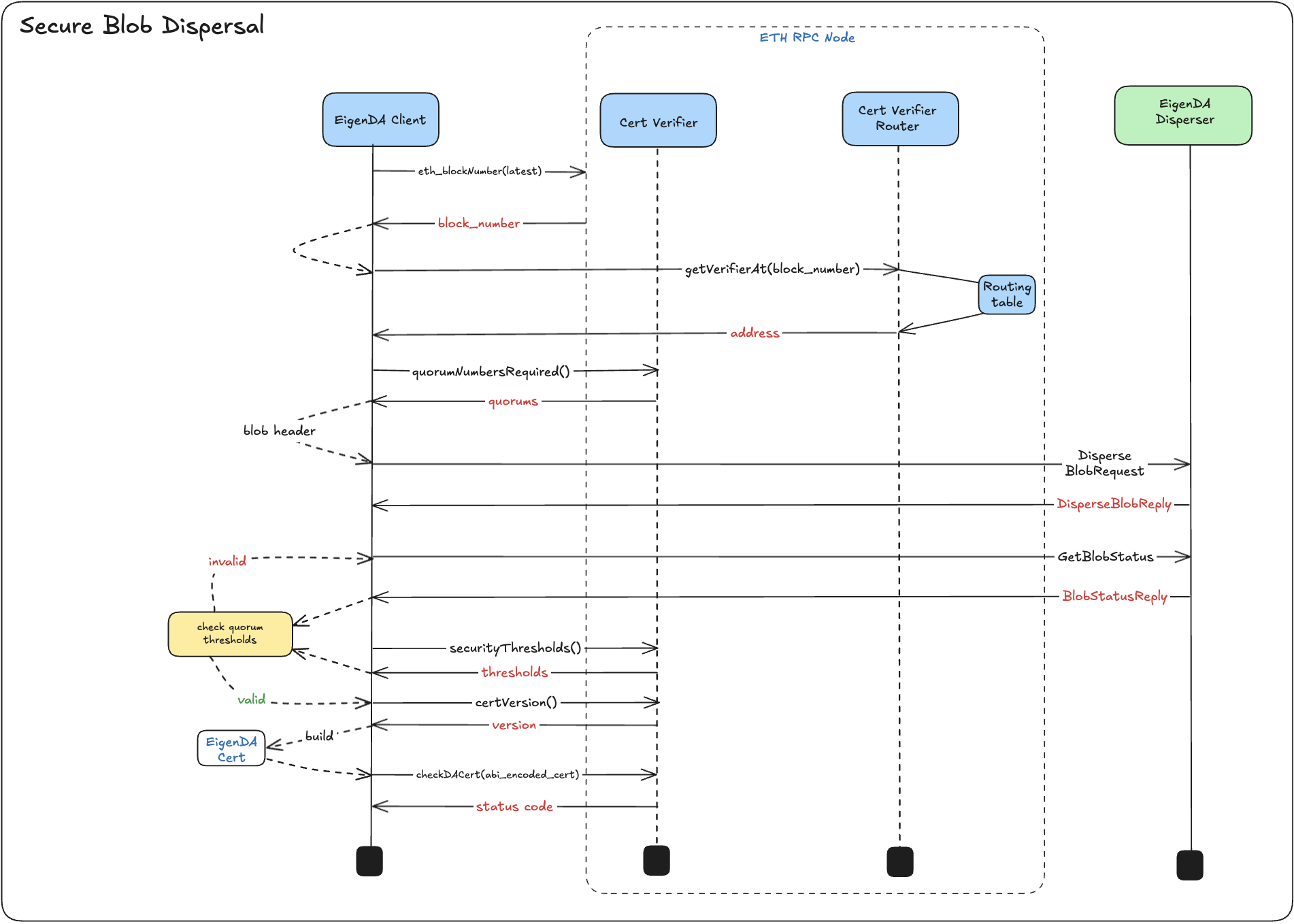

Secure Dispersal

Diagram

System Flow

- EigenDA Client takes raw payload bytes and converts them into a blob.

- Using the latest_block_number (lbn) fetched from an ETH RPC node, EigenDA Client calls the router (if using EigenDACertVerifierRouter) to get the EigenDACertVerifier contract address that is most likely to be committed to by the reference_block_number (rbn) the EigenDA disperser returns.

- Using the verifier, EigenDA Client fetches the required_quorums and embeds them into the BlobHeader as part of the disperser request.

- EigenDA Client submits the payload blob request to the EigenDA disperser via the DisperseBlob endpoint and polls for a BlobStatusReply (BSR).

- While querying the disperser's GetBlobStatus endpoint, EigenDA Client periodically checks the BlobStatusReply, which the disperser updates in real time, against the confirmation threshold. It uses the rbn returned in the BlobStatusReply to fetch the thresholds. (ref)

- Once the confirmation thresholds are met, EigenDA Client calls the verifier's certVersion() method to get the cert_version. It then casts the DACert into a structured ABI binding type, using the cert_version to decide which certificate representation to use. (ref)

- EigenDA Client then passes the ABI-encoded cert bytes in a call to the verifier's checkDACert function. This function performs onchain cert verification logic and returns a uint verification_status_code.

- Using the verification_status_code, the EigenDA Client determines whether to:

  - Return the certificate to the Rollup Batcher (i.e., when the code is CertV2Lib.StatusCode.SUCCESS), or
  - Fail over if any other status code is returned.
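The client-side dispersal steps above can be condensed into a single function. This is a minimal sketch, not the EigenDA Client's actual code. CertVerifier and its method names are hypothetical, as are the callables latest_block_number, verifier_at, disperse_and_wait and encode_cert. They stand in for the ETH RPC node, the router, the disperser and the ABI-binding calls. The numeric value used for CertV2Lib.StatusCode.SUCCESS is also an assumption. Payload-to-blob encoding is folded into disperse_and_wait.

```python
from typing import Callable, List, Optional, Protocol, Tuple

# ASSUMPTION: numeric value of CertV2Lib.StatusCode.SUCCESS. Confirm it against
# the deployed verifier contract; it is hard-coded here for illustration only.
SUCCESS_STATUS = 1


class CertVerifier(Protocol):
    """Hypothetical binding for an EigenDACertVerifier contract."""

    def required_quorums(self) -> List[int]: ...
    def cert_version(self) -> int: ...
    def check_da_cert(self, abi_encoded_cert: bytes) -> int: ...


def disperse_payload(
    payload: bytes,
    latest_block_number: Callable[[], int],
    verifier_at: Callable[[int], CertVerifier],
    disperse_and_wait: Callable[[bytes, List[int]], object],
    encode_cert: Callable[[object, int], bytes],
) -> Tuple[Optional[bytes], int]:
    """Return (abi_encoded_cert, status_code); cert is None when the batcher should fail over."""
    # Resolve the verifier that the lbn most likely maps to via the router.
    verifier = verifier_at(latest_block_number())
    # Embed the verifier's required quorums into the BlobHeader request.
    quorums = verifier.required_quorums()
    # Submit via DisperseBlob and poll GetBlobStatus until thresholds are met.
    status_reply = disperse_and_wait(payload, quorums)
    # cert_version selects which ABI representation the cert is encoded into.
    cert_bytes = encode_cert(status_reply, verifier.cert_version())
    # Onchain verification via checkDACert.
    status_code = verifier.check_da_cert(cert_bytes)
    # Return the cert on SUCCESS; any other code signals failover.
    if status_code == SUCCESS_STATUS:
        return cert_bytes, status_code
    return None, status_code
```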

Payload to Blob Encoding

This phase occurs inside the eigenda-proxy, because the proxy acts as the “bridge” between the Rollup Domain and Data Availability Domain (see lifecycle diagram).

A payload consists of an arbitrary byte array. The DisperseBlob endpoint accepts a blob, which needs to be an encoded bn254 field element array.
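One common way to turn arbitrary bytes into a valid bn254 field element array is to place a zero guard byte in front of every 31 payload bytes. Each 32-byte big-endian element is then below 2^248, and therefore below the bn254 scalar field modulus. The sketch below uses that technique. It also assumes a 32-byte header (guard byte, version byte, 4-byte big-endian payload length) and zero padding up to a power-of-two element count. Treat this layout as an illustration modeled on the proxy's encoding, not a byte-exact specification.

```python
# Scalar field modulus of bn254 (the curve's group order r).
BN254_R = 21888242871839275222246405745257275088548364400416034343698204186575808495617

CHUNK = 31  # payload bytes carried per 32-byte field element


def encode_payload(payload: bytes) -> bytes:
    """Sketch of payload -> blob encoding (the layout is an assumption)."""
    # Hypothetical 32-byte header: 0x00 guard byte, version 0, 4-byte big-endian length.
    header = bytes([0x00, 0x00]) + len(payload).to_bytes(4, "big") + bytes(26)
    body = bytearray()
    for i in range(0, len(payload), CHUNK):
        chunk = payload[i:i + CHUNK].ljust(CHUNK, b"\x00")  # zero-pad the last chunk
        body += b"\x00" + chunk  # guard byte keeps the element < 2**248 < BN254_R
    blob = header + bytes(body)
    # Pad with zero elements up to a power-of-two element count.
    n_elems = len(blob) // 32
    target = 1
    while target < n_elems:
        target *= 2
    return blob + bytes(32 * (target - n_elems))


def field_elements(blob: bytes) -> list:
    """Interpret a blob as big-endian 32-byte bn254 field elements."""
    return [int.from_bytes(blob[i:i + 32], "big") for i in range(0, len(blob), 32)]
```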

Disperser polling

The DisperseBlob method takes a blob and blob_header as input. The hash of the blob_header (known as the blobKey) serves as a unique identifier for tracking the dispersal status. Under the hood, the disperser performs the following steps:

- Batching: The blob is aggregated into a Merkle tree along with other blobs.

- Reed-Solomon Encoding: The blob is erasure-coded into chunks for fault tolerance.

- Dispersal to Validators: The chunks are distributed to EigenDA validator nodes based on the required quorum settings.

- Signature Collection: The disperser collects BLS signatures from participating validators.

- Status Reporting: A BlobStatusReply is returned to the client to reflect progress or terminal status.

The disperser batches blobs for a few seconds before dispersing them to nodes, so an entire dispersal can take more than 10 seconds. For this reason, the API is asynchronous and exposes two relevant methods.

```protobuf
// Async call which queues up the blob for processing and immediately returns.
rpc DisperseBlob(DisperseBlobRequest) returns (DisperseBlobReply) {}

// Polled for the blob status updates, until a terminal status is received
rpc GetBlobStatus(BlobStatusRequest) returns (BlobStatusReply) {}

// Intermediate states: QUEUED, ENCODED, GATHERING_SIGNATURES
// Terminal states: UNKNOWN, COMPLETE, FAILED
enum BlobStatus {
  UNKNOWN = 0; // functionally equivalent to FAILED but for unknown unknown bugs
  QUEUED = 1; // Initial state after a DisperseBlob call returns
  ENCODED = 2; // Reed-Solomon encoded into chunks ready to be dispersed to DA Nodes
  GATHERING_SIGNATURES = 3; // blob chunks are actively being transmitted to validators
  COMPLETE = 4; // blob has been dispersed and attested by DA nodes
  FAILED = 5;
}
```

After a successful DisperseBlob RPC call, the disperser returns BlobStatus.QUEUED. To retrieve a valid BlobStatusReply, poll the GetBlobStatus RPC endpoint until a terminal status is reached.

If BlobStatus.GATHERING_SIGNATURES is returned, the signed_batch and blob_verification_info fields will be present in the BlobStatusReply. These can be used to construct a DACert, which may be verified immediately against the configured threshold parameters stored in the EigenDACertVerifier contract. If the verification passes, the certificate can be accepted early. If verification fails, polling should continue.

BlobStatus.COMPLETE indicates that the disperser has stopped collecting additional signatures, typically because it reached a timeout or encountered an issue. The signed_batch and blob_verification_info fields will be populated and can be used to construct a DACert. However, the DACert may still be invalid if too few signatures were collected relative to the threshold parameters.

Any other terminal status indicates failure, and a new blob dispersal will need to be made.
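The polling rules above can be written as a loop. This is a minimal sketch that relies on hypothetical callables. get_blob_status wraps the GetBlobStatus RPC and returns a (status, reply) pair. try_build_cert builds a DACert from the reply and returns its bytes only when it passes the EigenDACertVerifier threshold check. The poll interval and the max_polls limit are illustrative values, not documented defaults.

```python
import time
from enum import IntEnum
from typing import Callable, Optional


class BlobStatus(IntEnum):
    # Mirrors the BlobStatus enum in the disperser proto.
    UNKNOWN = 0
    QUEUED = 1
    ENCODED = 2
    GATHERING_SIGNATURES = 3
    COMPLETE = 4
    FAILED = 5


def poll_for_cert(
    get_blob_status: Callable[[], tuple],
    try_build_cert: Callable[[object], Optional[bytes]],
    interval_s: float = 1.0,
    max_polls: int = 60,
) -> Optional[bytes]:
    """Poll until a cert passes the threshold check; None means re-disperse."""
    for _ in range(max_polls):
        status, reply = get_blob_status()
        if status in (BlobStatus.GATHERING_SIGNATURES, BlobStatus.COMPLETE):
            cert = try_build_cert(reply)
            if cert is not None:
                return cert  # accepted, possibly early (before COMPLETE)
            if status == BlobStatus.COMPLETE:
                return None  # no more signatures will arrive
        elif status in (BlobStatus.UNKNOWN, BlobStatus.FAILED):
            return None  # terminal failure: a new dispersal is needed
        time.sleep(interval_s)  # QUEUED, ENCODED, or not enough signatures yet
    return None  # hypothetical client-side polling timeout
```

Accepting a cert while the status is still GATHERING_SIGNATURES is safe here because the cert is only returned once it already satisfies the verifier's thresholds. The only difference is that the client does not wait for the disperser's signature window to close.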

Failover to Native Rollup DA

The Proxy can be configured to retry dispersals that end in BlobStatus.UNKNOWN, BlobStatus.FAILED, or BlobStatus.COMPLETE (if the threshold check failed) up to n times. After that, it returns a 503 HTTP status code to the rollup, which rollup batchers can use to fail over to EthDA or native rollup DA offerings (e.g., Arbitrum AnyTrust).

The Proxy will return a 503 Service Unavailable status code in cases where a dispersal succeeds against the Disperser but verification fails against the EigenDACertVerifier contract (i.e., any status code != SUCCESS).
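The retry-then-failover behavior can be sketched as follows. The sketch assumes a hypothetical attempt_dispersal callable. It performs one full dispersal and returns cert bytes only when the cert is usable (checkDACert returned SUCCESS). The sketch also treats n (max_retries here) as the number of retries after the first attempt. The proxy's actual configuration semantics may differ.

```python
from typing import Callable, Optional, Tuple

HTTP_OK = 200
HTTP_SERVICE_UNAVAILABLE = 503


def disperse_with_failover(
    attempt_dispersal: Callable[[], Optional[bytes]],
    max_retries: int,
) -> Tuple[int, Optional[bytes]]:
    """Try once plus max_retries retries, then tell the batcher to fail over.

    attempt_dispersal (hypothetical) returns None when an attempt ends in
    UNKNOWN, FAILED, COMPLETE with a failed threshold check, or a non-SUCCESS
    verification status code.
    """
    for _ in range(max_retries + 1):
        cert = attempt_dispersal()
        if cert is not None:
            return HTTP_OK, cert
    # Every attempt failed: 503 lets the batcher fail over (e.g. to EthDA).
    return HTTP_SERVICE_UNAVAILABLE, None
```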

See here for more info on the OP implementation and here for Arbitrum.

BlobStatusReply → Cert

Implementation Note: While not mandated by the EigenDA spec, clients must currently reconstruct the DACert from fields in the BlobStatusReply, as the disperser does not return a cert directly. The transformation is visualized in the Ultra High Res Diagram.

In the updated implementation, a CertBuilder constructs the DA Cert through direct communication with the OperatorStateRetriever contract, which provides the necessary information about operator stake states. This approach ensures accurate on-chain data for certificate verification. The following pseudocode demonstrates this process:

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class DACert:
    batch_header: Any
    blob_verification_proof: Any
    nonsigner_stake_sigs: Any
    cert_version: int  # uint8
    signedQuorumNumbers: bytes


def get_da_cert(blob_header_hash, operator_state_retriever, cert_version_uint8) -> DACert:
    """
    DA Cert construction pseudocode with OperatorStateRetriever

    @param blob_header_hash: key used for referencing blob status from disperser
    @param operator_state_retriever: ABI contract binding for retrieving operator state data
    @param cert_version_uint8: uint8 version of the certificate format to use
    @return DACert: EigenDA certificate used by rollup

    disperser_client and construct_nonsigner_stakes_and_signature are assumed
    to be provided by the surrounding client code.
    """
    # Call the disperser for the info needed to construct the cert
    blob_status_reply = disperser_client.get_blob_status(blob_header_hash)

    # Validate the blob_header received, since it uniquely identifies
    # an EigenDA dispersal.
    blob_header_hash_from_reply = (
        blob_status_reply.blob_verification_info.blob_certificate.blob_header.Hash()
    )
    if blob_header_hash != blob_header_hash_from_reply:
        raise ValueError("BlobStatusReply does not match the requested blob header hash")

    # Extract first 2 cert fields from blob status reply
    batch_header = blob_status_reply.signed_batch.batch_header
    blob_verification_proof = blob_status_reply.blob_verification_info

    # Get the reference block number from the batch header
    reference_block_number = batch_header.reference_block_number

    # Get quorum IDs from the blob header
    quorum_numbers = blob_verification_proof.blob_certificate.blob_header.quorum_numbers

    # Retrieve operator state data directly from the OperatorStateRetriever contract
    operator_states = operator_state_retriever.getOperatorState(
        reference_block_number,
        quorum_numbers,
        blob_status_reply.signed_batch.signatures,
    )

    # Construct NonSignerStakesAndSignature using the operator state data
    nonsigner_stake_sigs = construct_nonsigner_stakes_and_signature(
        operator_states,
        blob_status_reply.signed_batch.signatures,
    )

    signed_quorum_numbers = blob_status_reply.signed_batch.quorum_numbers

    return DACert(
        batch_header,
        blob_verification_proof,
        nonsigner_stake_sigs,
        cert_version_uint8,
        signed_quorum_numbers,
    )
```

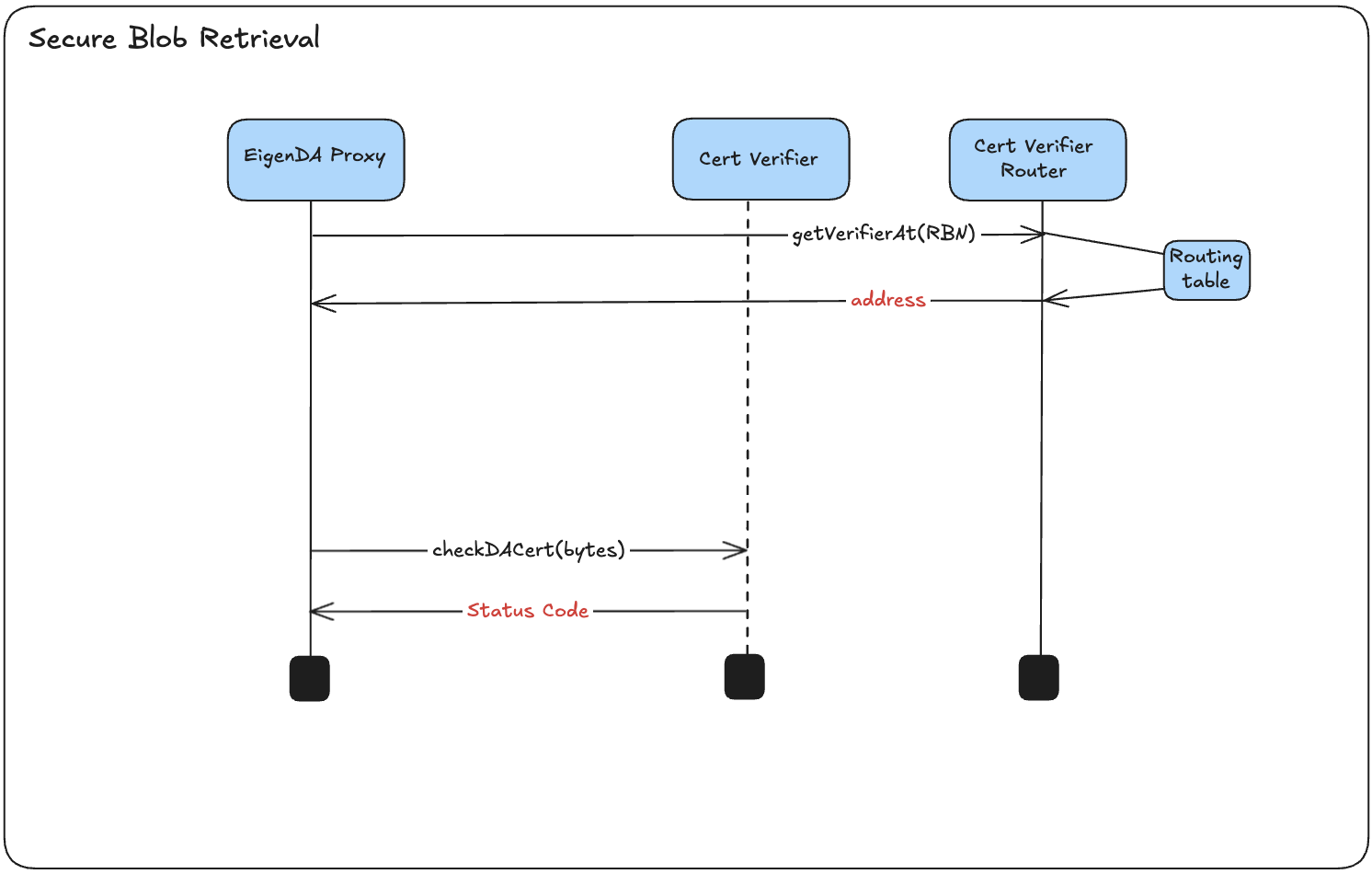

Secure Retrieval

System Diagram

System Flow

- A Rollup Node queries the Proxy's /get endpoint to fetch batch contents associated with an encoded DA commitment.

- Proxy decodes the cert_version for the DA commitment and uses an internal mapping of cert_version ⇒ cert_abi_struct to deserialize it into the structured binding cert type.

- Proxy submits the ABI-encoded cert bytes in a read call to the EigenDACertVerifier's checkDACert method, which returns a verification_status_code.

- Proxy interprets the verification_status_code to determine whether the certificate is valid. If verification fails, Proxy returns an HTTP 418 I'm a teapot status code. This tells a secure rollup to disregard the certificate and treat it as an empty batch in its derivation pipeline.

- Assuming a valid certificate, Proxy queries EigenDA retrieval paths for the underlying blob contents.

- Once the blob is fetched, Proxy verifies its KZG commitments to ensure tamper resistance (i.e., confirming that what EigenDA returns matches what was committed to during dispersal).

- Proxy decodes the underlying blob into a payload type, which is returned to the Rollup Node.
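The retrieval flow above can be written as a request handler. In this minimal sketch, every callable (cert_decoders, check_da_cert, retrieve_blob, verify_kzg, decode_blob) is a hypothetical stand-in for the proxy's internals. The numeric SUCCESS value is an assumption. The text above does not say how the proxy reacts to a KZG mismatch, so the sketch simply raises.

```python
from typing import Callable, Dict, Tuple

HTTP_OK = 200
HTTP_IM_A_TEAPOT = 418
# ASSUMPTION: numeric value of CertV2Lib.StatusCode.SUCCESS; confirm onchain.
SUCCESS_STATUS = 1


def handle_get(
    cert_version: int,
    cert_bytes: bytes,
    cert_decoders: Dict[int, Callable[[bytes], object]],
    check_da_cert: Callable[[object], int],
    retrieve_blob: Callable[[object], bytes],
    verify_kzg: Callable[[object, bytes], bool],
    decode_blob: Callable[[bytes], bytes],
) -> Tuple[int, bytes]:
    # cert_version => cert_abi_struct: pick the binding type for this version.
    cert = cert_decoders[cert_version](cert_bytes)
    # Read call to EigenDACertVerifier.checkDACert.
    if check_da_cert(cert) != SUCCESS_STATUS:
        # Invalid cert: the rollup should treat this as an empty batch.
        return HTTP_IM_A_TEAPOT, b""
    blob = retrieve_blob(cert)
    # The blob must match the KZG commitment carried in the cert.
    if not verify_kzg(cert, blob):
        raise ValueError("retrieved blob does not match the cert's KZG commitment")
    return HTTP_OK, decode_blob(blob)
```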

Retrieval Paths

There are two main blob retrieval paths:

- decentralized retrieval: retrieve erasure-coded chunks from Validators and recreate the blob from them.

- centralized retrieval: the same Relay API that Validators use to download chunks can also be used to retrieve full blobs.

EigenDA V2 has a new Relay API for retrieving blobs from the disperser. The GetBlob method takes a blob_key as input, which is the blobKey (also known as blob_header_hash) computed from the BlobHeader. Note that BlobCertificate (different from DACert) contains an array of relay_keys, which are the relays that can serve that specific blob. A relay's URL can be retrieved from the relayKeyToUrl function on the EigenDARelayRegistry.sol contract.
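A BlobCertificate can list several relay_keys, so a client can fall back from one relay to the next. This sketch rests on several assumptions. relay_key_to_url stands in for a call to relayKeyToUrl on EigenDARelayRegistry.sol. get_blob stands in for a Relay API GetBlob call. Trying relays in the order listed is a choice made here, not documented behavior. Blobs fetched this way still need the KZG check described in the retrieval flow.

```python
from typing import Callable, Iterable, Optional


def fetch_from_relays(
    blob_key: bytes,
    relay_keys: Iterable[int],
    relay_key_to_url: Callable[[int], str],
    get_blob: Callable[[str, bytes], bytes],
) -> Optional[bytes]:
    """Try each relay from the BlobCertificate until one serves the blob.

    relay_key_to_url and get_blob are hypothetical wrappers around the relay
    registry lookup and the Relay API GetBlob call.
    """
    for key in relay_keys:
        url = relay_key_to_url(key)
        try:
            return get_blob(url, blob_key)
        except (ConnectionError, TimeoutError, LookupError):
            continue  # relay unreachable or missing the blob: try the next one
    return None  # no relay served it; fall back to decentralized retrieval
```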

Decoding

Decoding performs the exact reverse of the operations performed during encoding.
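Assuming a simple hypothetical blob layout, decoding drops the header, strips the zero guard byte from each 32-byte element, and truncates the result to the declared length. The assumed layout is a 32-byte header whose bytes 2 to 5 hold the big-endian payload length. The header is followed by elements made of one 0x00 guard byte plus 31 payload bytes, with zero padding at the end. This is an illustrative sketch, not the proxy's actual decoder.

```python
def decode_blob(blob: bytes) -> bytes:
    """Invert a 31-bytes-per-element encoding (assumed layout, see lead-in)."""
    if len(blob) < 32 or len(blob) % 32 != 0:
        raise ValueError("blob must be a non-empty multiple of 32 bytes")
    # Hypothetical header: bytes 2..6 hold the big-endian payload length.
    payload_len = int.from_bytes(blob[2:6], "big")
    body = bytearray()
    for i in range(32, len(blob), 32):
        element = blob[i:i + 32]
        if element[0] != 0:
            raise ValueError("field element guard byte is not zero")
        body += element[1:]  # drop the guard byte, keep 31 payload bytes
    if payload_len > len(body):
        raise ValueError("declared length exceeds encoded data")
    return bytes(body[:payload_len])  # strip chunk and power-of-two padding
```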