EigenDA

EigenDA is a Data Availability (DA) service, implemented as an actively validated service (AVS) on EigenLayer, that provides secure and scalable DA for L2s on Ethereum.

What is DA?

In informal terms, DA is a guarantee that a given piece of data will be available to anyone who wishes to retrieve it.

A DA system accepts blobs of data (via some interface) and then makes them available to retrievers (through another interface).

Two important aspects of a DA system are

- Security: The security of a DA system constitutes the set of conditions which are sufficient to ensure that all data blobs certified by the system as available are indeed available for honest retrievers to download.

- Throughput: The throughput of a DA system is the rate at which the system is able to accept blobs of data, typically measured in bytes/second.

An EigenLayer AVS for DA

EigenDA is implemented as an actively validated service on EigenLayer, which is a restaking protocol for Ethereum.

Because of this, EigenDA makes use of the EigenLayer state, which is stored on Ethereum, for consensus about the state of operators and as a callback for consensus about the availability of data. This means that EigenDA can be simpler in implementation than many existing DA solutions: EigenDA doesn't need to build its own chain or consensus protocol; it rides on the back of Ethereum.

A first of its kind, horizontally scalable DA solution

Among extant DA solutions, EigenDA takes an approach to scalability which is unique in that it yields true horizontal scalability: Every additional unit of capacity contributed by an operator can increase the total system capacity.

This property is achieved by using a Reed-Solomon erasure encoding scheme to shard the blob data across the DA nodes. While other systems such as Celestia and Danksharding (planned) also make use of Reed-Solomon encoding, they do so only for the purpose of supporting certain observability properties of Data Availability Sampling (DAS) by light nodes. In those systems, all incentivized/full nodes still download, store, and serve the full system bandwidth.

Horizontal scalability provides the promise for the technological bottlenecks of DA capacity to continually track demand, which has enormous implications for Layer 2 ecosystems.

Security Model

EigenDA produces a DA attestation which asserts that a given blob or collection of blobs is available. Attestations are anchored to one or more "Quorums," each of which defines a set of EigenLayer stakers which underwrite the security of the attestation. Quorums should be considered as redundant: Each quorum linked to an attestation provides an independent guarantee of availability as if the other quorums did not exist.

Each attestation is characterized by safety and liveness tolerances:

- Liveness tolerance: Conditions under which the system will produce an availability attestation.

- Safety tolerance: Conditions under which an availability attestation implies that data is indeed available.

EigenDA defines two properties of each blob attestation which relate to its liveness and safety tolerance:

- Liveness threshold: The liveness threshold defines the minimum percentage of stake which an attacker must control in order to mount a liveness attack on the system.

- Safety threshold: The safety threshold defines the total percentage of stake which an attacker must control in order to mount a first-order safety attack on the system.

The term "first-order attack" alludes to the fact that exceeding the safety threshold may represent only a contingency rather than an actual safety failure due to the presence of recovery mechanisms that would apply during such a contingency. Discussion of such mechanisms is outside of the scope of the current documentation.

Safety thresholds can translate directly into cryptoeconomic safety properties for quorums consisting of tokens which experience toxicity in the event of publicly observable attacks by a large coalition of token holders. This and other discussions of cryptoeconomic security are also beyond the scope of this technical documentation. We restrict the discussion to illustrating how the protocol preserves the given safety and liveness thresholds.

Glossary

Rollup Batcher

The sequencer rollup node component responsible for constructing user transaction batches and submitting them to the settlement chain.

Rollup Nodes

Refers to any rollup node (e.g., validator, verifier) which syncs current chain state through an onchain sequencer inbox.

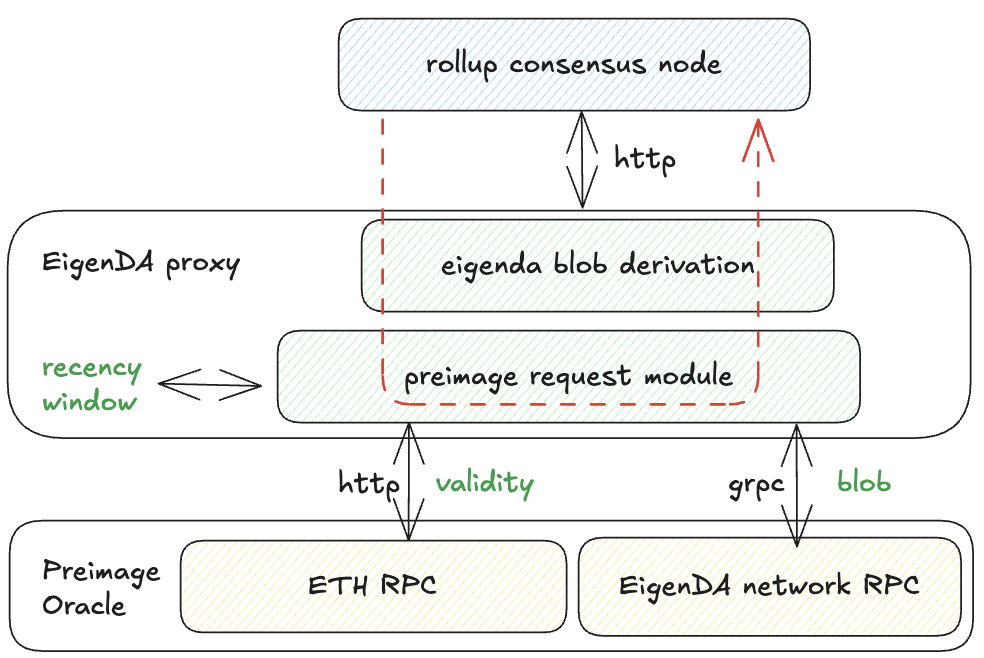

EigenDA Proxy

A sidecar server that runs as part of the rollup and is used for secure and trustless communication with EigenDA.

EigenDA Client

A collection of clients used for securely dispersing and reading EigenDA blobs.

Rollup Payload

Compressed batches of transactions or state diffs.

DA Certificate (DACert)

An EigenDA Certificate (or DACert for short) contains all the information needed to retrieve a blob from the EigenDA network and validate it.

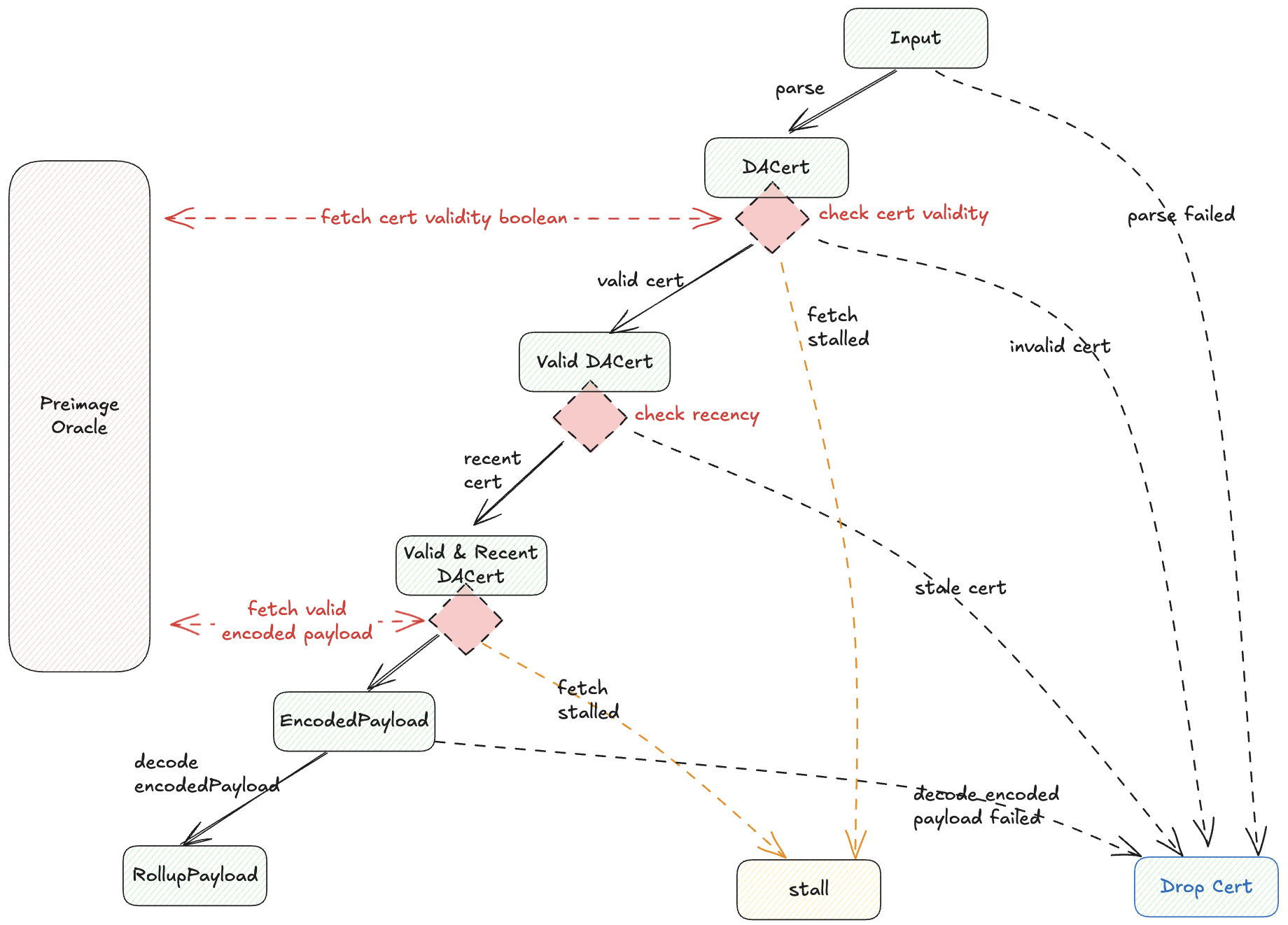

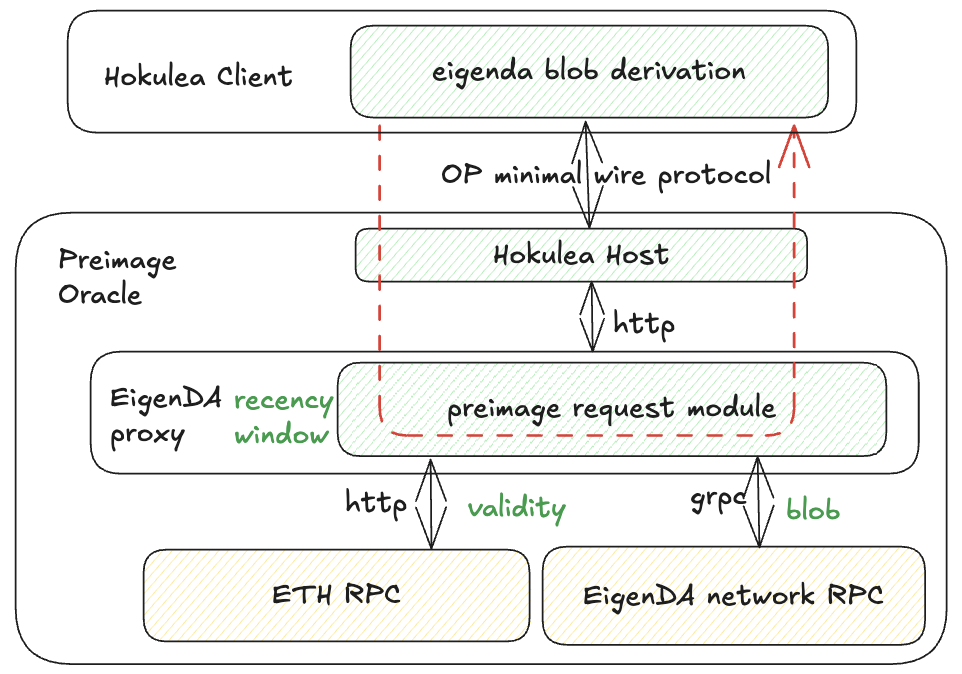

EigenDA Blob Derivation

A sequence of procedures to convert a byte array representing a DA certificate to the final rollup payload.

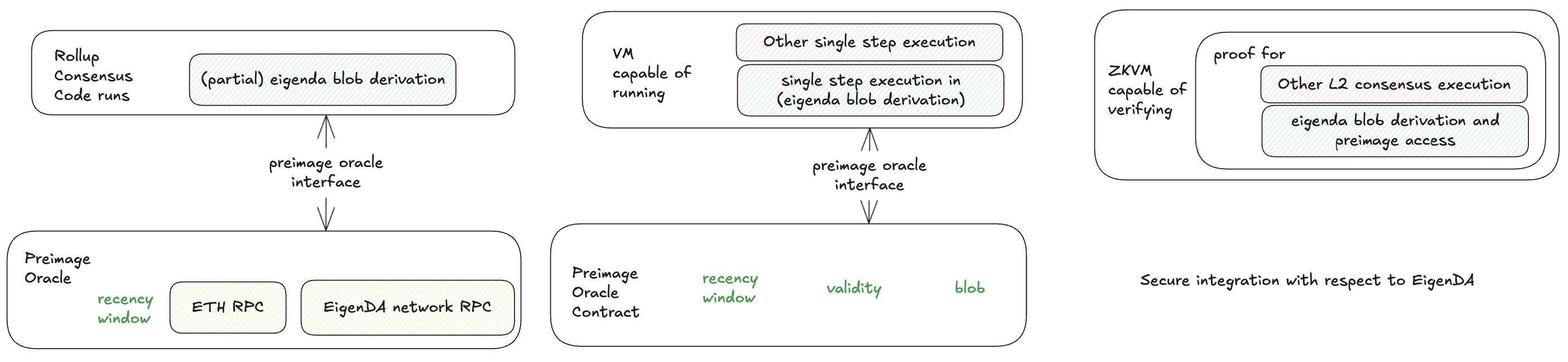

Preimage Oracle

An object with an interface for fetching additional data during EigenDA blob derivation, using keys generated from the data. Multiple implementations of the preimage oracle appear in EigenDA. In the proxy, the ETH RPC serves as the preimage oracle for DACert validity, and the EigenDA network RPC serves as the preimage oracle for the EigenDA blob.

Blob Field Element

EigenDA uses the bn254 curve. A field element on the bn254 curve is an integer x in the range 0 <= x < 21888242871839275222246405745257275088548364400416034343698204186575808495617.
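The range check above can be sketched in Go with `math/big`. This is only an illustration: the names `bn254Modulus` and `isFieldElementDecimal` are hypothetical and are not taken from the EigenDA codebase.

```go
package main

import (
	"fmt"
	"math/big"
)

// bn254Modulus is the upper bound quoted in the text above.
var bn254Modulus, _ = new(big.Int).SetString(
	"21888242871839275222246405745257275088548364400416034343698204186575808495617", 10)

// isFieldElementDecimal reports whether the decimal string s parses to an
// integer x with 0 <= x < bn254Modulus. Unparseable input is rejected.
func isFieldElementDecimal(s string) bool {
	x, ok := new(big.Int).SetString(s, 10)
	if !ok {
		return false
	}
	return x.Sign() >= 0 && x.Cmp(bn254Modulus) < 0
}

func main() {
	fmt.Println(isFieldElementDecimal("0"))                   // smallest valid element
	fmt.Println(isFieldElementDecimal(bn254Modulus.String())) // the modulus itself is out of range
}
```

A payload that is split into 32-byte words does not automatically satisfy this bound, since a 32-byte integer can exceed the modulus; that is why a check of this kind is useful before treating bytes as field elements.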

EigenDA Protocol

Broken down into 2 main sections.

Core Services

EigenDA Protocol consists of a suite of services that allow for data to be securely stored and retrieved from the validators.

Contracts

System Architecture

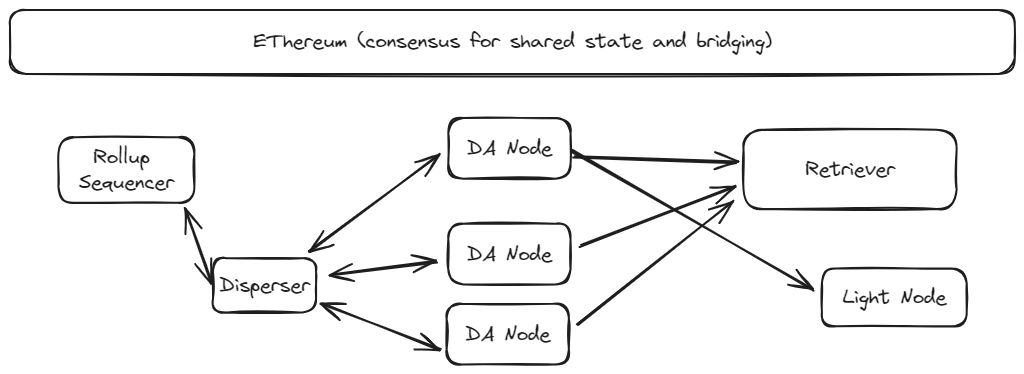

Core Components

- DA nodes are the service providers of EigenDA, storing chunks of blob data for a predefined time period and serving these chunks upon request.

- The disperser is responsible for encoding blobs, distributing them to the DA nodes, and aggregating their digital signatures into a DA attestation. As the disperser is currently centralized, it is trusted for system liveness; the disperser will be decentralized over time.

- The disperser and the DA nodes both depend on the Ethereum L1 for shared state about the DA node registration and stake delegation. The L1 is also currently used to bridge DA attestations to L2 end-user applications such as rollup chains.

Essential flows

Dispersal. This is the flow by which data is made available and consists of the following steps:

- The Disperser receives a collection of blobs, [encodes them], constructs a batch of encoded blobs and headers, and sends the sharded batch to the DA nodes.

- The DA nodes validate their shares of the batch, and return an attestation consisting of a BLS signature of the batch header.

- The disperser collects the attestations from the DA nodes and aggregates them into a single aggregate attestation.

Bridging. For a DA attestation to be consumed by the L2 end-user (e.g. a rollup), it must be bridged to a chain from which the L2 can read. This might simply be the Ethereum L1 itself, but in many cases it is more economical to bridge directly into the L2 since this drastically decreases signature verification costs. For the time being all attestations are bridged to the L1 by the disperser.

Retrieval. Interested parties such as rollup challengers that want to obtain rollup blob data can retrieve a blob by downloading the encoded chunks from the DA nodes and decoding them. The blob lookup information contained in the request to the DA nodes is obtained from the bridged attestation.

Protocol Overview

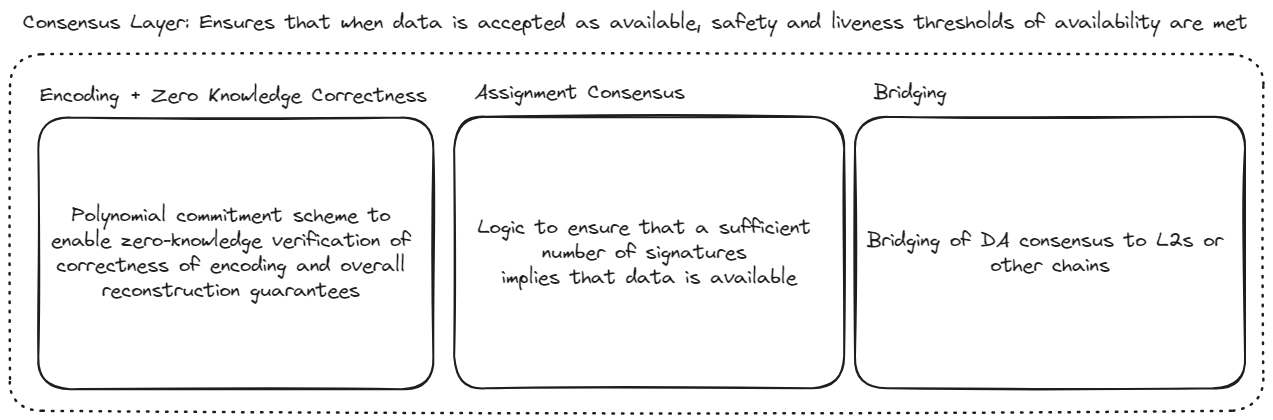

For expositional purposes, we will divide the protocol into two conceptual layers:

- Attestation Layer: Modules to ensure that whenever a DA attestation is accepted by an end-user (e.g. a rollup), then the data is indeed available. More specifically, the attestation layer ensures that the system observes the safety and liveness tolerances defined in the Security Model section.

- Network Layer: The communications protocol which ensures that the liveness and safety of the protocol are robust against network-level events and threats.

Attestation Layer

The attestation layer is responsible for ensuring that when the network-level assumptions and safety and liveness tolerances are observed, the system properly makes data available.

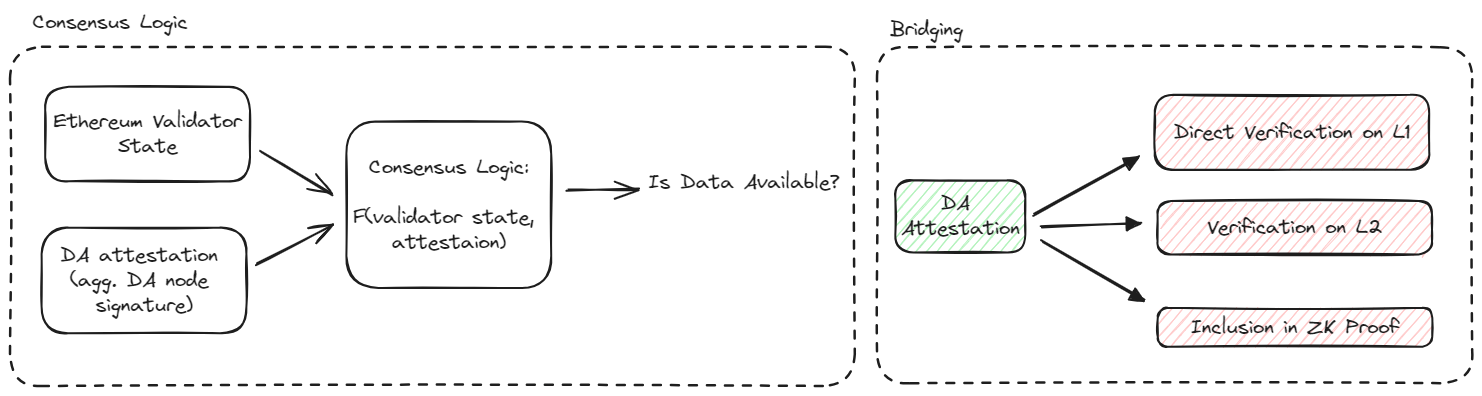

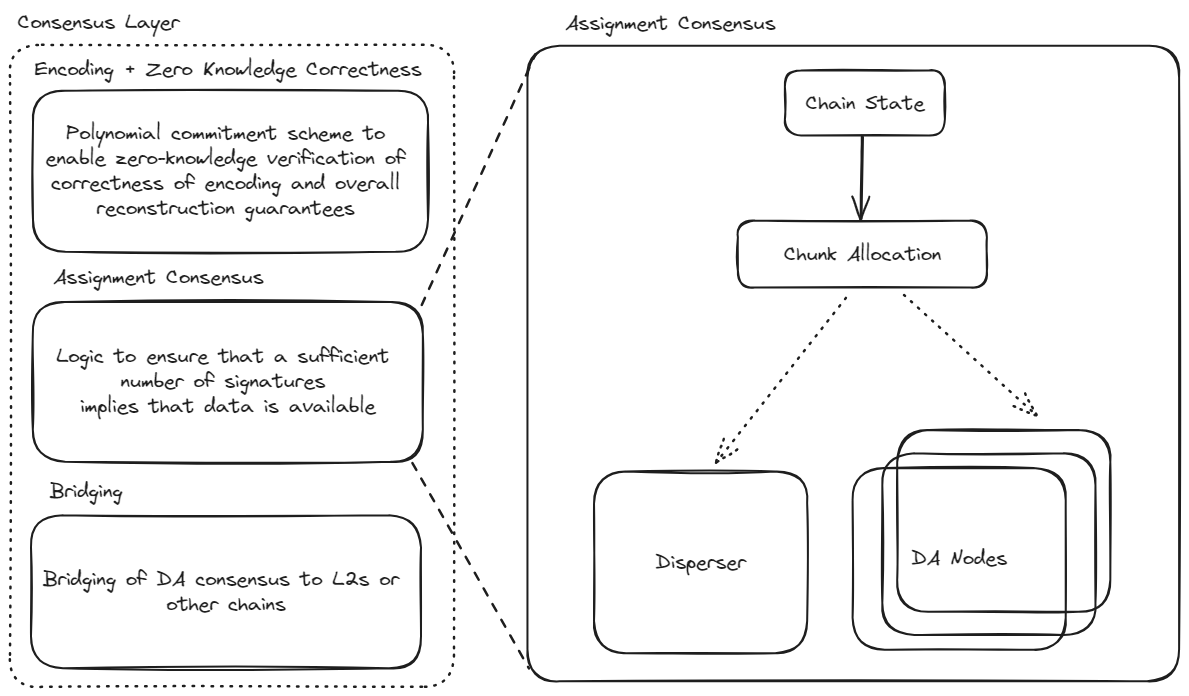

The primary responsibility of the attestation layer is to enable consensus about whether a given blob of data is fully within the custody of a set of honest nodes. (Here, what can be taken to be a set of honest nodes is defined by the system safety tolerance and the assurance that these honest nodes will be able to transmit the data to honest retrievers is handled by the network layer.) Since EigenDA is an EigenLayer AVS it does not need its own actual consensus protocol, but can instead piggy-back off of Ethereum's consensus. As a result, the attestation layer decomposes into two fairly straightforward pieces:

- Attestation Logic: The attestation logic allows us to answer the question of whether a given blob is available, given both a DA attestation and the validator state at the associated Ethereum block. The attestation logic can be understood as simply a function of these inputs which outputs yes or no, depending on whether these inputs imply that data is available. Naturally, this function is grounded upon assumptions about the behavior of honest nodes, which must perform certain validation actions as part of the attestation layer. The attestation logic further decomposes into two major modules:

- Encoding: The encoding module defines a procedure for blobs to be encoded in such a way that their successful reconstruction can be guaranteed given a large enough collection of unique encoded chunks. The procedure also allows for the chunks to be trustlessly verified against a blob commitment so that the disperser cannot violate the protocol.

- Assignment: The assignment module provides a deterministic mapping from validator state to an allocation of encoded chunks to DA nodes. The mapping is designed to uphold safety and liveness properties with minimal data-inefficiency.

- Bridging: Bridging describes how the attestation is bridged to the consumer protocol, such as that of the rollup. In principle, bridging can be performed in one of several different ways in order to optimize efficiency and composability. At the moment, only bridging via the Ethereum L1 is directly supported.

The desired behavior of the attestation logic can be formally described as follows (ignore this if you're happy with the high-level ideas): Let \(\alpha\) denote the safety threshold, i.e. the maximum proportion of adversarial stake that the system is able to tolerate. Likewise, let \(\beta\) represent the amount of stake that we require to be held by the signing operators in order to accept an attestation, i.e. one minus the liveness threshold. Also, let \(O\) denote the set of EigenDA operators, and let \(S_i\) denote the stake of operator \(i \in O\).

We need to guarantee that for any set of signing operators \(U_q \subseteq O\) such that

\[ \sum_{i \in U_q} S_i \ge \beta \sum_{i \in O} S_i \]

and any set of adversarial operators \(U_a \subseteq U_q\) such that

\[ \sum_{i \in U_a} S_i \le \alpha \sum_{i \in O} S_i \]

we can reconstruct the original data blob from the chunks held by \( U_q \setminus U_a \).
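As a rough illustration of the signing condition (the stake held by the signing operators must be at least a fraction \(\beta\) of the total stake), the following Go sketch checks that condition with integer arithmetic. The function name `meetsThreshold`, the operator IDs, and the 55% threshold are hypothetical values chosen for the example; this is not the on-chain verification logic.

```go
package main

import "fmt"

// meetsThreshold reports whether the stake held by the distinct signers is at
// least num/den of the total stake. Cross-multiplication avoids floating point.
// Toy sketch: it assumes stakes are small enough that the products do not overflow.
func meetsThreshold(stakes map[string]uint64, signers []string, num, den uint64) bool {
	var total, signed uint64
	for _, s := range stakes {
		total += s
	}
	seen := make(map[string]bool)
	for _, id := range signers {
		if !seen[id] { // count each signer once
			seen[id] = true
			signed += stakes[id]
		}
	}
	return signed*den >= num*total
}

func main() {
	stakes := map[string]uint64{"a": 40, "b": 35, "c": 25}
	fmt.Println(meetsThreshold(stakes, []string{"a", "b"}, 55, 100)) // 75% signed
	fmt.Println(meetsThreshold(stakes, []string{"c"}, 55, 100))      // 25% signed
}
```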

Encoding Module

The encoding module defines a procedure for blobs to be encoded in such a way that their successful reconstruction can be guaranteed given a large enough collection of unique encoded chunks. The procedure also allows for the chunks to be trustlessly verified against a blob commitment so that the disperser cannot violate the protocol.

Assignment Module

The assignment module is nothing more than a rule which takes in the Ethereum chain state and outputs an allocation of chunks to DA operators.

Signature verification and bridging

See the integration contracts section for details on how the attestation is bridged to the consumer protocol, such as that of the rollup.

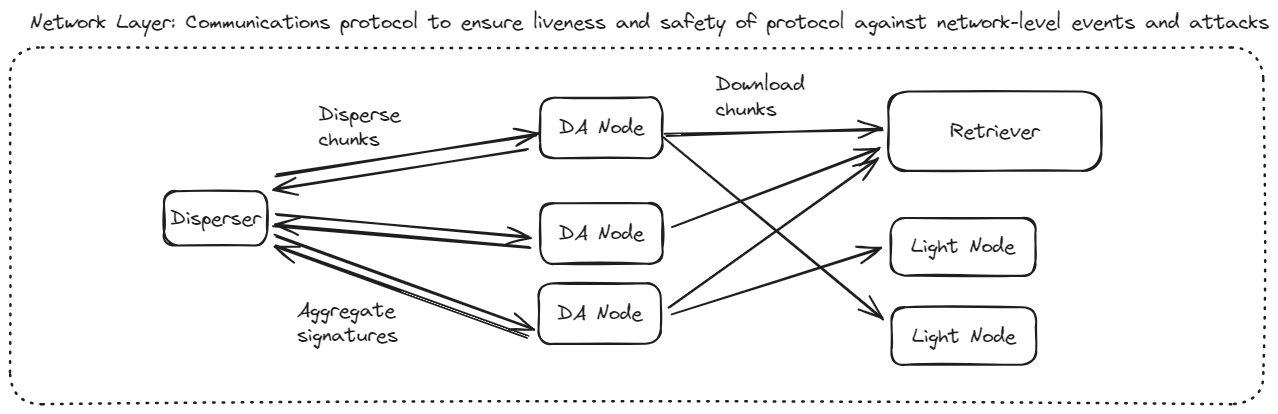

Network Layer

The network layer is described in the Write and Read Workflow, which explains how each component interacts when writing to and reading from EigenDA.

Encoding Module

The encoding module defines a procedure for blobs to be encoded in such a way that their successful reconstruction can be guaranteed given a large enough collection of unique encoded chunks. The procedure also allows for the chunks to be trustlessly verified against a blob commitment so that the disperser cannot violate the protocol.

One way to think of the encoding module is that it must satisfy the following security requirements:

- Adversarial tolerance for DA nodes: We need to have tolerance to arbitrary adversarial behavior by any number of DA nodes up to some threshold. Note that while simple sharding approaches such as duplicating slices of the blob data have good tolerance to random node dropout, they have poor tolerance to worst-case adversarial behavior.

- Adversarial tolerance for disperser: We do not want to put trust assumptions on the encoder or rely on fraud proofs to detect if an encoding is done incorrectly.

Trustless Encoding via KZG and Reed-Solomon

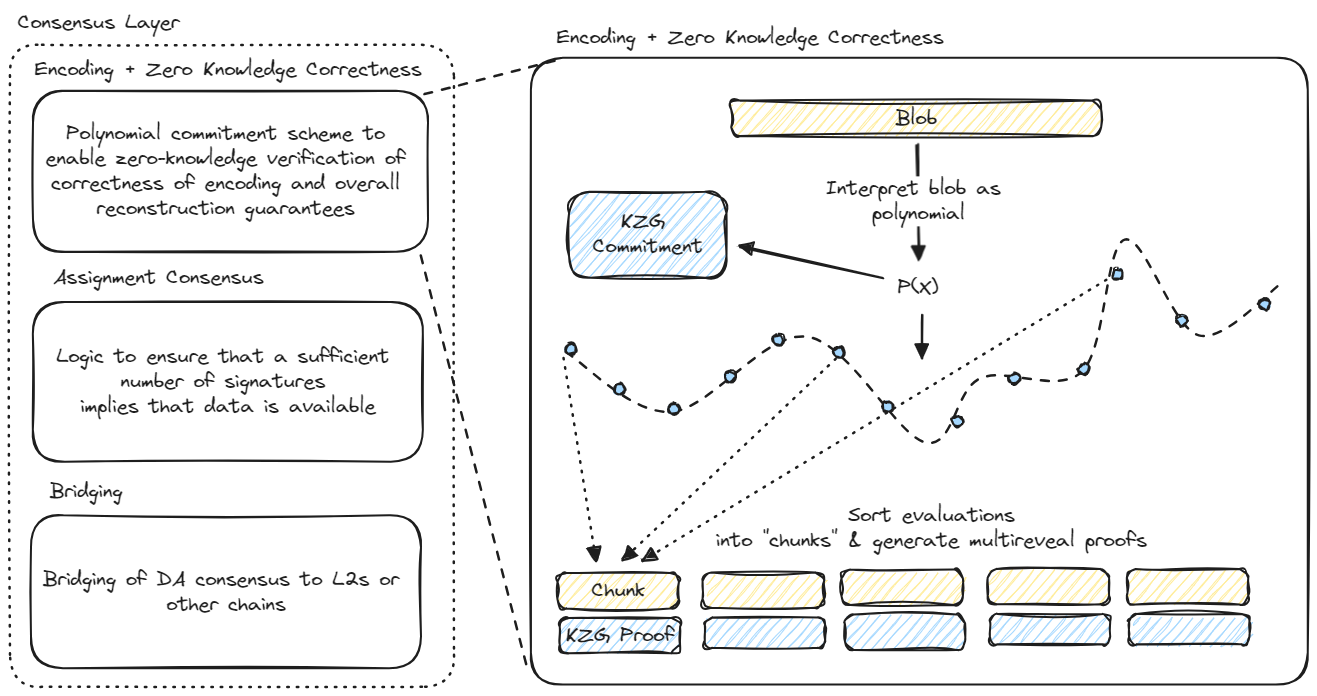

EigenDA uses a combination of Reed-Solomon (RS) erasure coding and KZG polynomial commitments to perform trustless encoding. In this section, we provide a high level overview of how the EigenDA encoding module works and how it achieves these properties.

Reed Solomon Encoding

Basic RS encoding is used to achieve the first requirement of Adversarial tolerance for DA nodes. This looks like the following:

- The blob data is represented as a string of symbols, where each symbol is an element of a certain finite field. The number of symbols is called the BlobLength.

- These symbols are interpreted as the coefficients of a BlobLength-1 degree polynomial.

- This polynomial is evaluated at NumChunks*ChunkLength distinct indices.

- Chunks are constructed, where each chunk consists of the polynomial evaluations at ChunkLength distinct indices.

Notice that given any number M of chunks such that M*ChunkLength >= BlobLength, via polynomial interpolation it is possible to reconstruct the original polynomial, and therefore its coefficients which represent the original blob.
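The encode-then-interpolate idea can be sketched in Go over a toy field. Everything here is an assumption made for illustration: the modulus 97 stands in for the BN254 field, the evaluation indices are simply 0, 1, 2, ... rather than roots of unity, and the function names are hypothetical. It shows that two chunks of length 2 are enough to recover a blob of length 4.

```go
package main

import "fmt"

// p is a toy prime modulus (an assumption for this sketch, not the BN254 field).
const p = 97

// modPow computes b^e mod p by square-and-multiply.
func modPow(b, e int) int {
	r := 1
	b %= p
	for ; e > 0; e >>= 1 {
		if e&1 == 1 {
			r = r * b % p
		}
		b = b * b % p
	}
	return r
}

// eval evaluates the polynomial with the given coefficients at x (Horner's rule).
func eval(coeffs []int, x int) int {
	acc := 0
	for i := len(coeffs) - 1; i >= 0; i-- {
		acc = (acc*x + coeffs[i]) % p
	}
	return acc
}

// encode evaluates the blob polynomial at x = 0, 1, ..., numChunks*chunkLen-1
// and groups consecutive evaluations into chunks.
func encode(blob []int, numChunks, chunkLen int) [][]int {
	chunks := make([][]int, numChunks)
	for j := range chunks {
		for k := 0; k < chunkLen; k++ {
			chunks[j] = append(chunks[j], eval(blob, j*chunkLen+k))
		}
	}
	return chunks
}

// interpolate recovers len(xs) coefficients from the points (xs[i], ys[i])
// using Lagrange interpolation over the toy field.
func interpolate(xs, ys []int) []int {
	n := len(xs)
	coeffs := make([]int, n)
	for i := 0; i < n; i++ {
		basis := []int{1} // running product of (X - xs[j]) for j != i
		denom := 1        // running product of (xs[i] - xs[j]) for j != i
		for j := 0; j < n; j++ {
			if j == i {
				continue
			}
			next := make([]int, len(basis)+1)
			for d, c := range basis {
				next[d+1] = (next[d+1] + c) % p
				next[d] = (next[d] + (p-xs[j])*c) % p
			}
			basis = next
			denom = denom * ((xs[i] - xs[j] + p) % p) % p
		}
		scale := ys[i] * modPow(denom, p-2) % p // divide by denom via Fermat inverse
		for d, c := range basis {
			coeffs[d] = (coeffs[d] + scale*c) % p
		}
	}
	return coeffs
}

// roundTrip encodes the blob, keeps only the listed chunks, and decodes again.
// It requires len(keep)*chunkLen >= len(blob).
func roundTrip(blob []int, numChunks, chunkLen int, keep []int) []int {
	chunks := encode(blob, numChunks, chunkLen)
	var xs, ys []int
	for _, j := range keep {
		for k, y := range chunks[j] {
			xs = append(xs, j*chunkLen+k)
			ys = append(ys, y)
		}
	}
	return interpolate(xs[:len(blob)], ys[:len(blob)])
}

func main() {
	blob := []int{5, 17, 42, 8} // BlobLength = 4
	fmt.Println(roundTrip(blob, 4, 2, []int{1, 3})) // prints [5 17 42 8]
}
```

Any other pair of chunks works equally well, which is the property that gives tolerance to worst-case node behavior rather than only random dropout.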

Validation via KZG

Addressing the requirement of adversarial tolerance for the disperser using RS encoding alone requires fraud proofs: a challenger must download all of the encoded chunks and check that they lie on a polynomial corresponding to the blob commitment.

To avoid the need for fraud proofs, EigenDA follows the trail blazed by the Ethereum DA sharding roadmap in using KZG polynomial commitments.

Chunk Validation

Blobs sent to EigenDA are identified by their KZG commitment (which can be calculated by the disperser and easily validated by the rollup sequencer). When the disperser generates the encoded blob chunks, it also generates a collection of opening proofs which the DA nodes can use to trustlessly verify that their chunks fall on the blob polynomial at the correct indices (note: the indices are jointly derived by the disperser and DA nodes from the chain state using the logic in the Assignment module to ensure that the evaluation indices for each node are unique).

Blob Size Verification

KZG commitments can also be used to verify the degree of the original polynomial, which in turn corresponds to the size of the original blob. Having a trustlessly verifiable upper bound on the size of the blob is necessary for DA nodes to verify the correctness of the chunk assignment defined by the assignment module.

The KZG commitment relies on a structured reference string (SRS) containing a generator point \(G\) multiplied by all of the powers of some secret field element \(\tau\), up to some maximum power \(n\). This means that it is not possible to use this SRS to commit to a polynomial of degree greater than \(n\). A consequence of this is that if \(p(x)\) is a polynomial of degree greater than \(m\), it will not be possible to commit to the polynomial \(x^{n-m}p(x)\). A "valid" commitment to the polynomial \(x^{n-m}p(x)\) thus constitutes a proof that the polynomial \(p(x)\) is of degree less than or equal to \(m\).

In practice, this looks like the following:

- If the disperser wishes to claim that the polynomial \(p(x)\) is of degree less than or equal to \(m\), they must provide along with the commitment \(C_1\) to \(p\), a commitment \(C_2\) to \(q(x) = x^{n-m}p(x)\).

- The verifier then performs the pairing check \(e(C_1, [\tau^{n-m}]_2) = e(C_2, H)\), where \(H\) is the G2 generator and \([\tau^{n-m}]_2\) is the \((n-m)\)'th power of tau. This pairing will only evaluate correctly when \(C_2\) was constructed as described above and \(\deg(p) \le m\).

Note: The blob length verification here allows for the blob length to be upper-bounded; it cannot be used to prove the exact blob length.
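To see why the pairing check succeeds for an honest disperser, the bilinearity of the pairing can be unfolded step by step. The notation here is an assumption made for this sketch: \(G\) and \(H\) are the G1 and G2 generators, \(\tau\) is the SRS secret, \(n\) is the maximum SRS power, \(m\) is the claimed degree bound, \(C_1 = p(\tau)\,G\) is the commitment to \(p\), and \(C_2 = \tau^{n-m}p(\tau)\,G\) is the commitment to the shifted polynomial.

```latex
\begin{aligned}
e\left(C_1,\ [\tau^{n-m}]_2\right)
  &= e\left(p(\tau)\,G,\ \tau^{n-m}\,H\right) \\
  &= e(G, H)^{\,p(\tau)\,\tau^{n-m}} \\
  &= e\left(\tau^{n-m}\,p(\tau)\,G,\ H\right) \\
  &= e(C_2,\ H).
\end{aligned}
```

If \(\deg(p) > m\), the shifted polynomial has degree above \(n\), so computing \(C_2\) would need powers of \(\tau\) that the SRS does not contain.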

Prover Optimizations

EigenDA makes use of the results of Fast Amortized Kate Proofs, developed for Ethereum's sharding roadmap, to reduce the computational complexity for proof generation.

See the full discussion

Verifier Optimizations

Without any optimizations, the KZG verification complexity can lead to a computational bottleneck for the DA nodes. Fortunately, the Universal Verification Equation developed for Danksharding data availability sampling dramatically reduces the complexity. EigenDA has implemented this optimization to eliminate this bottleneck for the DA nodes.

Amortized KZG Prover Backend

It is important that the encoding and commitment tasks are able to be performed in seconds and that the dominating complexity of the computation is nearly linear in the degree of the polynomial. This is done using algorithms based on the Fast Fourier Transform (FFT).

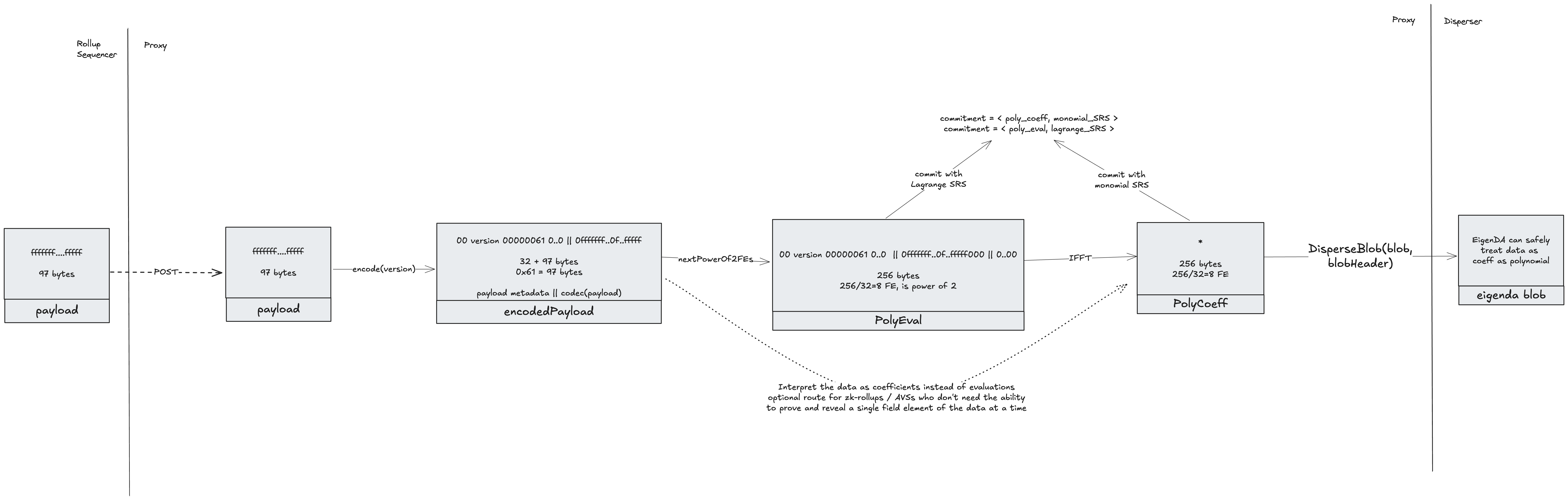

This document describes how the KZG-FFT encoder backend implements the Encode(data [][]byte, params EncodingParams) (BlobCommitments, []*Chunk, error) interface, which 1) transforms the blob into a list of params.NumChunks Chunks, where each chunk is of length params.ChunkLength 2) produces the associated polynomial commitments and proofs.

We will also highlight the additional constraints on the Encoding interface which arise from the KZG-FFT encoder backend.

Deriving the polynomial coefficients and commitment

As described in the Encoding Module Specification, given a blob of data, we convert the blob to a polynomial \(p(X) = \sum_{i=0}^{n-1} c_i X^i\) by simply slicing the data into a string of symbols, and interpreting this list of symbols as the tuple \((c_i)_{i=0}^{n-1}\).

In the case of the KZG-FFT encoder, the polynomial lives on the field associated with the BN254 elliptic curve, which has order 21888242871839275222246405745257275088548364400416034343698204186575808495617 (the bound given under Blob Field Element in the Glossary).

Given this polynomial representation, the KZG commitment can be calculated as in KZG polynomial commitments.

Polynomial Evaluation with the FFT

In order to use a Discrete Fourier Transform (DFT) to evaluate a polynomial, the indices of the polynomial evaluations which will make up the Chunks must be members of a cyclic group, which we will call \(S\). A cyclic group is the group generated by taking all of the integer powers of some generator \(\nu\), i.e., \(S = \{\nu^k : k \in \mathbb{Z}\}\). (For this reason, the elements of a cyclic group \(S\) of order \(m\) will sometimes be referred to as the \(m\)'th roots of unity.) Notice that since our polynomial lives on the BN254 field, the group \(S\) must be a subgroup of that field (i.e. all of its elements must lie within that field).

Given a cyclic group \(S\) of order \(m\), we can evaluate a polynomial with \(n\) coefficients at the indices contained in \(S\) via the DFT,

\[ T_k = \sum_{i=0}^{n-1} c_i (\nu^k)^i, \]

where \(T_k\) gives the evaluation of the polynomial at \(\nu^k\). Letting \(c\) denote the vector of polynomial coefficients and \(T\) the vector of polynomial evaluations, we can use the shorthand \(T = \mathrm{DFT}[c]\). The inverse relation also holds, i.e., \(c = \mathrm{DFT}^{-1}[T]\).

To evaluate the DFT programmatically, we want \(m = n\). Notice that we can achieve this when \(m > n\) by simply padding \(c\) with zeros to be of length \(m\).

The use of the FFT can levy an additional requirement on the size of the group \(S\). In our implementation, we require the size of \(S\) to be a power of 2. For this, we can make use of the fact that the prime field associated with BN254 contains a subgroup of order \(2^{28}\), which in turn contains subgroups of orders spanning every power of 2 less than \(2^{28}\).

As the encoding interface calls for the construction of NumChunks Chunks of length ChunkLength, our application requires that \(S\) be of size NumChunks*ChunkLength, which in turn must be a power of 2.
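The relationship between coefficients, zero padding, and evaluations on a cyclic group can be sketched with a naive DFT over a toy field. The field F_17, the generator 2 (which has order 8 modulo 17), and all function names are assumptions made for this example. The loop is quadratic in the group size, whereas an FFT computes the same values faster; the real encoder works over the BN254 field.

```go
package main

import "fmt"

const (
	q     = 17 // toy prime modulus (assumption for this sketch)
	omega = 2  // generator of the order-8 subgroup: 2^8 = 256 = 1 mod 17
	m     = 8  // group size, a power of 2
)

// powMod computes b^e mod q by repeated multiplication (fine for tiny inputs).
func powMod(b, e int) int {
	r := 1
	for i := 0; i < e; i++ {
		r = r * b % q
	}
	return r
}

// dft zero-pads coeffs to length m and evaluates the polynomial at
// root^0, root^1, ..., root^(m-1). It assumes len(coeffs) <= m.
func dft(coeffs []int, root int) []int {
	padded := make([]int, m)
	copy(padded, coeffs)
	out := make([]int, m)
	for k := 0; k < m; k++ {
		x := powMod(root, k)
		for i := m - 1; i >= 0; i-- { // Horner's rule
			out[k] = (out[k]*x + padded[i]) % q
		}
	}
	return out
}

// inverseDFT recovers the coefficients: c_i = m^{-1} * sum_k T_k * omega^{-ik}.
func inverseDFT(evals []int) []int {
	omegaInv := powMod(omega, m-1) // omega^(m-1) is the inverse of omega
	mInv := powMod(m, q-2)         // Fermat inverse of m modulo q
	out := dft(evals, omegaInv)
	for i := range out {
		out[i] = out[i] * mInv % q
	}
	return out
}

func main() {
	evals := dft([]int{3, 1, 4}, omega) // p(X) = 3 + X + 4X^2, padded to length 8
	fmt.Println(evals)
	fmt.Println(inverseDFT(evals)) // recovers the padded coefficient vector
}
```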

Amortized Multireveal Proof Generation with the FFT

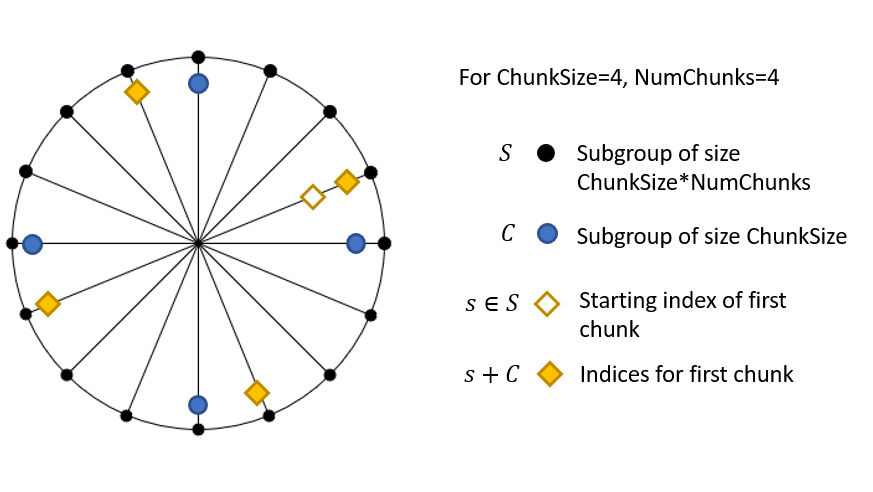

The construction of the multireveal proofs can also be performed using a DFT (as in “Fast Amortized Kate Proofs”). Leaving the full details of this process to the referenced document, we describe here only 1) the index-assignment scheme used by the amortized multiproof generation approach and 2) the constraints that this creates for the overall encoder interface.

Given the group \(S\) corresponding to the indices of the polynomial evaluations and a cyclic group \(C\) which is a subgroup of \(S\), the cosets of \(C\) in \(S\) are given by

\[ sC = \{ s \cdot c : c \in C \} \quad \text{for } s \in S. \]

Each coset \(sC\) has size \(|C|\), and there are \(|S|/|C|\) unique and disjoint cosets.

Given a polynomial \(p(X)\) and the groups \(S\) and \(C\), the Amortized Kate Proofs approach generates \(|S|/|C|\) different KZG multi-reveal proofs, where each proof is associated with the evaluation of \(p(X)\) at the indices contained in a single coset \(sC\) for \(s \in S\). Because the Amortized Kate Proofs approach uses the FFT under the hood, \(C\) itself must have an order which is a power of 2.

For the purposes of the KZG-FFT encoder, this means that we must choose \(S\) to be of size NumChunks*ChunkLength and \(C\) to be of size ChunkLength, each of which must be powers of 2.

Worked Example

As a simple illustrative example, suppose that AssignmentCoordinator provides the following parameters in order to meet the security requirements of a given blob:

ChunkLength = 3

NumChunks = 4

Supplied with these parameters, Encoder.ParamsFromMins will upgrade ChunkLength to the next highest power of 2, i.e., ChunkLength = 4, and leave NumChunks unchanged. The following figure illustrates how the indices will be assigned across the chunks in this scenario.
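The rounding step and the resulting coset structure can be sketched as follows. The helper names are hypothetical, and the mapping of chunk j to the coset generated from the j'th power of the group generator is an assumption made for illustration; the actual encoder may order chunks differently. Each chunk is described by the exponents e of the generator at which it holds evaluations.

```go
package main

import "fmt"

// nextPowerOf2 returns the smallest power of 2 that is >= v (for v >= 1).
func nextPowerOf2(v uint32) uint32 {
	p := uint32(1)
	for p < v {
		p <<= 1
	}
	return p
}

// cosetExponents returns, for each chunk j, the exponents e such that the chunk
// holds evaluations at nu^e, where nu generates the group of order
// numChunks*chunkLen. Chunk j is mapped (by assumption) to the coset
// nu^j * C, where the subgroup C of order chunkLen is generated by nu^numChunks.
func cosetExponents(numChunks, chunkLen uint32) [][]uint32 {
	out := make([][]uint32, numChunks)
	for j := uint32(0); j < numChunks; j++ {
		for k := uint32(0); k < chunkLen; k++ {
			out[j] = append(out[j], j+k*numChunks)
		}
	}
	return out
}

func main() {
	chunkLen := nextPowerOf2(3) // 3 is rounded up to 4
	for j, exps := range cosetExponents(4, chunkLen) {
		fmt.Printf("chunk %d: exponents %v\n", j, exps)
	}
}
```

With these parameters the 16 evaluation indices split into 4 disjoint cosets of size 4, so every index belongs to exactly one chunk.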

Assignment Module

The assignment module determines how encoded blob chunks are allocated to validators based on the Ethereum chain state, specifically validator stakes and quorum memberships. Given the validator state and blob parameters, it produces a deterministic mapping from validators to chunk indices. The mapping ensures that a sufficient number of signatures and honest validators implies that data is available.

The assignment module is implemented in core/v2/assignment.go. For blobs dispersed to multiple quorums, the algorithm employs overlap optimization to minimize storage requirements while maintaining security guarantees.

Chunk Assignment Algorithm within One Quorum

The chunk assignment scheme assigns encoded chunks to validators proportionally to their stake, ensuring that any coalition of validators with sufficient combined stake can reconstruct the blob.

Given:

- A set of \(n\) validators with stakes \(\eta_1, \eta_2, \ldots, \eta_n\), where \(\sum_{i=1}^{n} \eta_i = 1\)

- A set of \(c\) chunks to be assigned to the validators

Within a single quorum, the number of chunks assigned to validator \(i\) is:

\[ c_i = \lceil \eta_i(c - n) \rceil \]

This assignment ensures that the total number of assigned chunks is less than or equal to the total number of chunks \(c\), since \(\sum_{i=1}^{n} c_i \le \sum_{i=1}^{n} \left(\eta_i(c - n) + 1\right) = (c - n) + n = c\).

Because the total never exceeds \(c\), the chunks assigned to validators within a quorum can be made non-overlapping. In other words, each validator in a quorum contributes distinct chunks for reconstruction. The proof that any subset of validators with sufficient combined stake can reconstruct the blob is provided in Security Parameters.
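The stake-proportional rule can be sketched with integer arithmetic. The function names and the example stakes are hypothetical; the parameters 8192 and 3537 are the default NumChunks and MaxNumOperators quoted later in this document, and the contiguous layout follows the description in the Code Walkthrough. This is a simplified illustration, not the code in core/v2/assignment.go.

```go
package main

import "fmt"

// chunksPerValidator returns c_i = ceil(stake_i * (numChunks - maxOperators) / totalStake)
// for each validator, using integer ceiling division.
func chunksPerValidator(stakes []uint64, numChunks, maxOperators uint64) []uint64 {
	out := make([]uint64, len(stakes))
	var total uint64
	for _, s := range stakes {
		total += s
	}
	if total == 0 {
		return out // no stake, nothing to assign
	}
	effective := numChunks - maxOperators
	for i, s := range stakes {
		out[i] = (effective*s + total - 1) / total
	}
	return out
}

// contiguousRanges lays the counts out as non-overlapping index ranges [start, end),
// starting from offset 0.
func contiguousRanges(counts []uint64) [][2]uint64 {
	ranges := make([][2]uint64, len(counts))
	var offset uint64
	for i, n := range counts {
		ranges[i] = [2]uint64{offset, offset + n}
		offset += n
	}
	return ranges
}

func main() {
	counts := chunksPerValidator([]uint64{50, 30, 20}, 8192, 3537)
	fmt.Println(counts)                   // prints [2328 1397 931]
	fmt.Println(contiguousRanges(counts)) // prints [[0 2328] [2328 3725] [3725 4656]]
}
```

The three counts sum to 4656, well below the 8192 available chunks, which matches the bound derived above.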

Chunk Assignment for Multiple Quorums

EigenDA supports blobs dispersed to multiple quorums simultaneously. The security threshold is guaranteed to hold for each quorum independently, as shown in the previous section. The multi-quorum assignment algorithm minimizes storage requirements through overlap optimization while maintaining security guarantees.

Storage Optimization Strategy

The assignment algorithm uses two key strategies to minimize storage:

- Chunk Overlap Maximization: When a validator participates in multiple quorums for the same blob, the algorithm reuses the same chunk indices across quorums whenever possible.

- Reconstruction Capping: Each validator is assigned at most the number of chunks needed to independently reconstruct the blob.

Example: Consider a validator with 5% stake in quorum 0 and 15% stake in quorum 1. Without optimization, the validator might receive two non-overlapping sets of chunks (one per quorum), totaling up to 20% of all chunks. With overlap optimization, the validator stores only max(chunks_quorum_0, chunks_quorum_1) unique chunks, which is 15% of the total chunks. With reconstruction capping, if the coding rate is \(\gamma = 1/8\), the validator only needs to store 1/8 of the total chunks.

Algorithm Components

The multi-quorum assignment algorithm consists of four key functions:

1. GetAssignmentsForQuorum: Calculates assignments for a single quorum independently using the stake-proportional algorithm described above.

2. AddAssignmentsForQuorum: Generates the assignment for a new quorum while maximizing overlap with a baseline quorum assignment through a two-phase process:

- Phase 1 (Overlap Maximization): For each validator, reuse as many chunk indices as possible from the baseline quorum assignment, up to the number required for the new quorum. Mark these reused indices as "used."

- Phase 2 (Gap Filling): Distribute the remaining unused chunk indices to validators who need additional chunks beyond what was reused from the baseline, ensuring each validator receives their stake-proportional allocation in the new quorum.

This algorithm guarantees that validators participating in both quorums store only max(chunks_in_quorum_1, chunks_in_quorum_2) unique chunks rather than the sum.

3. MergeAssignmentsAndCap: Merges assignments across all quorums and caps the total at the reconstruction threshold:

\text{max\_chunks} = c \cdot \gamma

where \(c\) is the total number of chunks and \(\gamma\) is the coding rate. This cap exists because once a validator has enough unique chunks to reconstruct the blob, additional chunks provide no incremental security benefit. Therefore, pruning the extra chunks improves performance and reduces storage and bandwidth requirements without affecting security.

4. GetAssignmentsForBlob: Coordinates the full multi-quorum assignment process:

- Generate the assignment for quorum 0 using GetAssignmentsForQuorum

- Generate assignments for all other quorums using AddAssignmentsForQuorum with quorum 0 as the baseline

- Merge all per-quorum assignments using MergeAssignmentsAndCap to produce the final assignment for each validator
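The merge-and-cap step for a single validator can be sketched as a set union followed by truncation. The function `mergeAndCap` is a hypothetical simplification: it works on plain index slices rather than the Assignment type, and keeping the lowest indices when capping is an assumption of this sketch, not necessarily what MergeAssignmentsAndCap does.

```go
package main

import (
	"fmt"
	"sort"
)

// mergeAndCap unions one validator's chunk indices across quorums and keeps at
// most numChunks/codingRate of them (the reconstruction cap).
func mergeAndCap(perQuorum [][]uint32, numChunks, codingRate uint32) []uint32 {
	seen := make(map[uint32]bool)
	var merged []uint32
	for _, indices := range perQuorum {
		for _, idx := range indices {
			if !seen[idx] { // an index shared by several quorums is stored once
				seen[idx] = true
				merged = append(merged, idx)
			}
		}
	}
	sort.Slice(merged, func(a, b int) bool { return merged[a] < merged[b] })
	if maxChunks := numChunks / codingRate; uint32(len(merged)) > maxChunks {
		merged = merged[:maxChunks] // assumption: keep the lowest indices
	}
	return merged
}

func main() {
	// Overlapping assignments in two quorums: indices 4 is shared.
	fmt.Println(mergeAndCap([][]uint32{{3, 4}, {4, 5, 6}}, 32, 4)) // prints [3 4 5 6]
	// Cap of 16/8 = 2 chunks applies.
	fmt.Println(mergeAndCap([][]uint32{{0, 1}, {0, 1, 2}}, 16, 8)) // prints [0 1]
}
```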

Note on Optimality: The algorithm produces optimal storage assignments for two quorums. For three or more quorums, the assignment is not guaranteed to be globally optimal. Since quorums 0 and 1 are the "default" quorums and are expected to be larger than custom quorums (i.e. containing the most validators), the algorithm achieves near-optimal storage reduction for the majority of validators.

Code Walkthrough

Notation note: In the code, we sometimes use the term operator to refer to a validator, although validator is now the preferred term.

Location: core/v2/assignment.go

Data Structure:

    type Assignment struct {
        Indices []uint32 // Explicit list of chunk indices (non-contiguous)
    }

Core Functions:

1. GetAssignmentsForQuorum (core/v2/assignment.go:40-90)

Assigns chunks for a single quorum with deterministic ordering:

    func GetAssignmentsForQuorum(
        state *core.OperatorState,
        blobParams *core.BlobVersionParameters,
        quorum core.QuorumID,
    ) (map[core.OperatorID]*Assignment, []core.OperatorID, error)

Algorithm:

- Sort operators by hex ID for determinism

- Calculate effective chunks: effectiveNumChunks = NumChunks - MaxNumOperators

- For each operator i: chunksForOperator = ceil((effectiveNumChunks × stake_i) / totalStake)

- Assign contiguous indices starting from offset 0

- Return assignments and ordered operator list

2. AddAssignmentsForQuorum (core/v2/assignment.go:99-161)

Maximizes overlap with a baseline assignment:

    func AddAssignmentsForQuorum(
        assignments map[core.OperatorID]*Assignment, // Baseline from first quorum
        state *core.OperatorState,
        blobParams *core.BlobVersionParameters,
        quorum core.QuorumID,
    ) (map[core.OperatorID]*Assignment, error)

Two-phase algorithm:

- Phase 1 (Lines 115-136): For each operator, reuse indices from baseline up to their allotted count for this quorum

- Phase 2 (Lines 145-158): Distribute unused indices to operators needing more chunks

3. MergeAssignmentsAndCap (core/v2/assignment.go:167-220)

    func MergeAssignmentsAndCap(
        assignments []map[core.OperatorID]*Assignment,
        blobParams *core.BlobVersionParameters,
    ) map[core.OperatorID]Assignment

Merges all quorum assignments and caps at maxChunks = NumChunks / CodingRate

4. GetAssignmentsForBlob (core/v2/assignment.go:227-266)

Main entry point coordinating the full multi-quorum assignment:

    func GetAssignmentsForBlob(
        state *core.OperatorState,
        blobParams *core.BlobVersionParameters,
        quorums []core.QuorumID,
    ) (map[core.OperatorID]Assignment, error) {
        // Sort quorums for determinism
        sort.Slice(quorums, ...)

        // One per-quorum assignment map for each requested quorum
        assignmentsList := make([]map[core.OperatorID]*Assignment, len(quorums))
        var err error

        // Process first quorum
        assignmentsList[0], _, err = GetAssignmentsForQuorum(state, blobParams, quorums[0])
        if err != nil {
            return nil, err
        }

        // Process remaining quorums with overlap optimization
        for i := 1; i < len(quorums); i++ {
            assignmentsList[i], err = AddAssignmentsForQuorum(
                assignmentsList[0], state, blobParams, quorums[i])
            if err != nil {
                return nil, err
            }
        }

        // Merge and cap
        return MergeAssignmentsAndCap(assignmentsList, blobParams), nil
    }

Usage in Node Chunk Download (node/node_v2.go:40-105):

    func (n *Node) DetermineChunkLocations(
        batch *corev2.Batch,
        operatorState *core.OperatorState,
    ) {
        for _, cert := range batch.BlobCertificates {
            // Get assignment for this operator across ALL quorums in the blob
            assgn, err := corev2.GetAssignmentForBlob(
                operatorState,
                blobParams,
                cert.BlobHeader.QuorumNumbers, // Multiple quorums
                n.Config.ID)

            // Request specific chunk indices from relay
            req.chunkRequests = append(req.chunkRequests, &relay.ChunkRequestByIndex{
                BlobKey: blobKey,
                Indices: assgn.Indices, // Explicit indices with overlap optimization
            })
        }
    }

Usage in Validation (core/v2/validator.go:49-79):

    func (v *shardValidator) validateBlobParams(
        blob *BlobShard,
        blobParams *core.BlobVersionParameters,
        operatorState *core.OperatorState,
    ) (*Assignment, error) {
        // Get assignment across all quorums for this blob
        assignment, err := GetAssignmentForBlob(
            operatorState,
            blobParams,
            blob.BlobHeader.QuorumNumbers, // All quorums
            v.operatorID)
        if err != nil {
            return nil, err
        }

        // Validate chunk count
        if assignment.NumChunks() != uint32(len(blob.Bundle)) {
            return nil, errors.New("chunk count does not match assignment")
        }

        // Validate chunk lengths (expectedChunkLength is computed elsewhere; elided in this excerpt)
        for _, chunk := range blob.Bundle {
            if chunk.Length() != expectedChunkLength {
                return nil, errors.New("chunk length does not match expected length")
            }
        }

        return &assignment, nil
    }

Security Parameters

This page proves the relationship between blob parameters and security thresholds. We also point readers to the code where security threshold constraints are implemented.

Blob Parameters and Reconstruction Threshold

In this part, we present the blob parameters and use these parameters to derive the reconstruction threshold.

Blob Parameters

We define the Blob parameters as a tuple \((n, c, \gamma)\) where:

- \(n\) (MaxNumOperators): Maximum number of validators allowed in EigenDA.

- \(c\) (NumChunks): The total number of encoded chunks after erasure coding (must be a power of 2).

- \(\gamma\) (1/CodingRate): The ratio of original data to total encoded chunks, providing redundancy (must be an inverse power of 2). Note that for representational purposes, the CodingRate in our code is the inverse of \(\gamma\), while \(\gamma\) is the standard coding rate used in coding theory.

Among the blob parameters, CodingRate and NumChunks are used in the encoding process, while NumChunks and MaxNumOperators are used in the chunk assignment process.

This tuple is stored in the struct shown below (see in the code):

struct VersionedBlobParams {

uint32 maxNumOperators;

uint32 numChunks;

uint8 codingRate;

}

The blob parameters for each version are stored in the EigenDAThresholdRegistry contract.

They are configured here, and the default parameters are shown below.

versionedBlobParams = [

{ 0_maxNumOperators = 3537, 1_numChunks = 8192, 2_codingRate = 8 }

]

Note on MaxNumOperators

The MaxNumOperators parameter (n = 3537) serves as an upper bound used in the chunk assignment algorithm and security threshold derivations. This upper bound ensures that the reconstruction threshold and other security properties remain fixed and predictable, regardless of how many validators actually register.

The actual number of validators allowed to register for each quorum is controlled separately by the on-chain maxOperatorCount parameter in the OperatorSetParam struct. The current on-chain limits per quorum are:

- Quorum 0 (ETH): 200 validators

- Quorum 1 (EIGEN): 200 validators

- Quorum 2 (Custom): 15 validators

The per-quorum limits can be adjusted via governance without requiring changes to the blob parameters or security thresholds, as long as they remain below the upper bound.

For more details on how maxOperatorCount is enforced during operator registration, see the EigenDARegistryCoordinator contract.

Reconstruction Threshold

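We define ReconstructionThreshold, also denoted as $\alpha$, as the minimum fraction of total stake required to reconstruct the blob.

In this section, we prove that, with our chunk assignment algorithm, the reconstruction threshold is:

$$\alpha = \frac{c}{c - n}\,\gamma, \quad \text{where } c > n.$$

In other words, we want to prove that any subset of validators with at least $\alpha$ of total stake collectively own enough chunks to reconstruct the original blob.

Formally, write $\eta_i$ for the fraction of total stake held by validator $i$ and $c_i$ for the number of chunks assigned to it. We need to show that for any set of validators $H$ with total stake $\sum_{i \in H} \eta_i \geq \alpha$, the chunks assigned to $H$ satisfy $\sum_{i \in H} c_i \geq \gamma c$.

Proof:

By the chunk assignment scheme, we have:

$$c_i \geq \eta_i (c - n)$$

Therefore, since $\sum_{i \in H} \eta_i \geq \alpha = \frac{c}{c - n}\,\gamma$, we have:

$$\sum_{i \in H} c_i \geq (c - n) \sum_{i \in H} \eta_i \geq (c - n) \cdot \frac{c}{c - n}\,\gamma = \gamma c$$

We have now proved that any subset of validators with at least $\alpha$ of the total stake owns at least $\gamma c$ chunks, which is guaranteed to reconstruct the original blob due to the property of Reed-Solomon encoding.

As shown in the previous subsection, by default $n = 3537$, $c = 8192$, and $\gamma = 1/8$, which gives a reconstruction threshold of $\alpha = \frac{8192}{8192 - 3537} \cdot \frac{1}{8} \approx 0.22$.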

Intuition: Loss in Chunk Assignment

If we look closely at the reconstruction threshold, we find that it is given by the encoding rate $\gamma$ multiplied by a factor greater than 1:

$$\text{ReconstructionThreshold} = \gamma \cdot \frac{c}{c - n}, \quad \text{where } \frac{c}{c - n} > 1 \text{ whenever } 0 < n < c.$$

This means that in practice, a group of validators needs to hold more stake than the theoretical threshold $\gamma$ to guarantee reconstruction.

In an ideal world, any subset of validators holding a fraction $\gamma$ of the total stake would also hold a fraction $\gamma$ of the chunks, and therefore could recover the blob. But in reality, because chunk assignments are discrete, some loss occurs: a validator’s assigned share of chunks can be less than its stake share.

Suppose there are 10 chunks and 3 validators, each with one-third of the stake. Using the assignment algorithm, we might get:

- Validator 1 → 4 chunks

- Validator 2 → 3 chunks

- Validator 3 → 3 chunks

Here, Validator 2 has 33% of the stake but only 30% of the chunks. This loss can make the difference in meeting the reconstruction threshold.

The mismatch becomes even more pronounced as the number of validators increases.

Imagine 10 million validators, each with equal stake, but only 10,000 chunks to be assigned in total. In this case, only a small fraction of validators can get at least 1 chunk, while the majority get none at all. The loss is enormous.

This is why the MaxNumOperators becomes an important parameter in determining the reconstruction threshold: the more validators there are relative to the number of chunks, the higher the loss from assignment imbalance.

BFT Security

Having established the relationship between the blob parameters and the reconstruction threshold, we now turn to the Byzantine Fault Tolerant (BFT) security model and how it relates to the blob parameters.

Definition of Security Thresholds

In this section, we define and prove the safety and liveness properties of EigenDA, building on the reconstruction property established above.

The Byzantine liveness and safety properties of a blob are specified by a collection of SecurityThresholds:

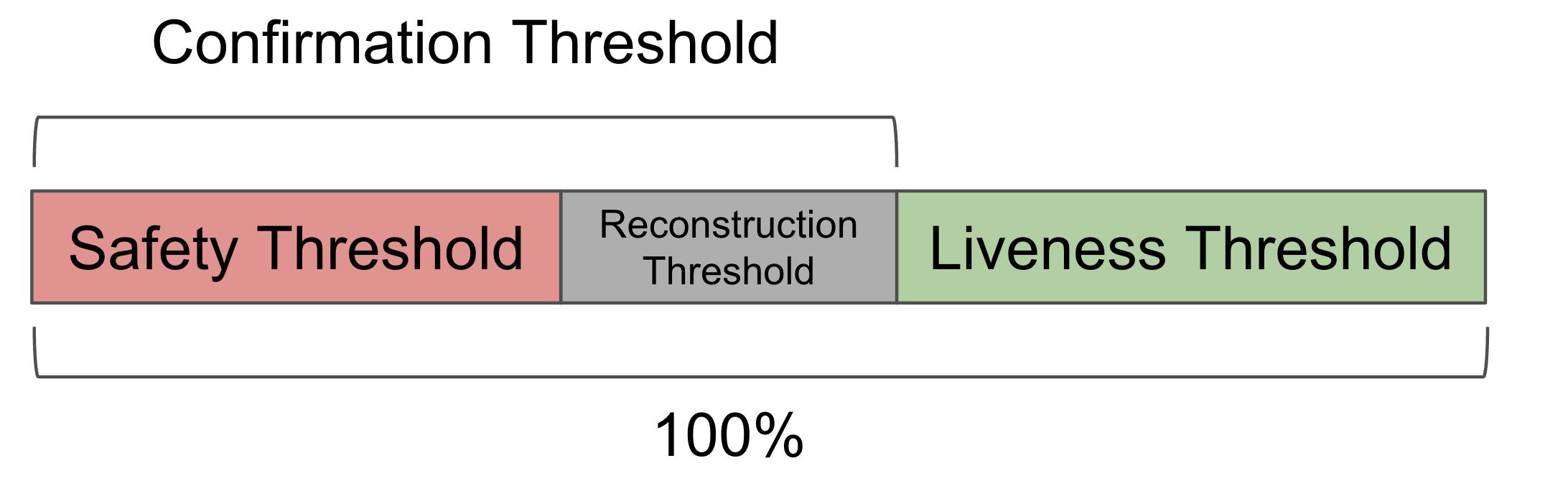

- ConfirmationThreshold: The confirmation threshold defines the minimum percentage of stake that must sign to make the DA certificate valid.

- SafetyThreshold: The safety threshold refers to the minimum percentage of total stake an attacker must control to make a blob with a valid DA certificate unavailable.

- LivenessThreshold: The liveness threshold refers to the minimum percentage of total stake an attacker must control to cause a liveness failure.

How to Set the Confirmation Threshold

In the BFT security model, the SafetyThreshold and LivenessThreshold are estimated by the client. The SafetyThreshold is the maximum stake controlled by an adversary that signs the certificate but fails to serve the data, while the LivenessThreshold is the maximum stake controlled by an adversary that does not sign the certificates.

The ConfirmationThreshold is set based on the following two criteria:

1. Confirmation Threshold and Safety Threshold

To ensure that each blob with a valid DA certificate is available, the following inequality must be satisfied when setting the ConfirmationThreshold:

ConfirmationThreshold - SafetyThreshold >= ReconstructionThreshold (1)

Intuitively, since the adversary controls less than SafetyThreshold of the stake, honest validators holding at least ConfirmationThreshold - SafetyThreshold of the stake must have signed any valid DA certificate.

Therefore, as long as ConfirmationThreshold - SafetyThreshold >= ReconstructionThreshold, the honest validators should own a large enough set of chunks to reconstruct the blob.

⚠️

We strongly recommend that users set a SafetyThreshold >= 33% if they ever want to change the default settings.

2. Confirmation Threshold and Liveness Threshold

The ConfirmationThreshold and LivenessThreshold satisfy the following inequality:

ConfirmationThreshold <= 1 - LivenessThreshold (2)

This is because a valid certificate requires signatures from at least ConfirmationThreshold of stake. If ConfirmationThreshold is greater than 1 - LivenessThreshold, the adversary can cause a liveness failure by simply not signing the certificate.

In summary, the SafetyThreshold and LivenessThreshold depend on the choice of ConfirmationThreshold. The picture below shows the relationship between these security thresholds.

A table of the security thresholds is given below for the reader's reference, assuming that the reconstruction threshold is 22%.

| Confirmation Threshold | Safety Threshold | Liveness Threshold |
|---|---|---|
| 55% | 33% | 45% |
| 60% | 38% | 40% |
| 65% | 43% | 35% |
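The rows of this table follow directly from inequalities (1) and (2) taken with equality. As a small sketch, the hypothetical helper `deriveThresholds` below (not part of the EigenDA code) computes the largest SafetyThreshold and LivenessThreshold compatible with a given ConfirmationThreshold, with all values in percent.

```go
package main

import "fmt"

// deriveThresholds returns the largest SafetyThreshold permitted by
// inequality (1) and the largest LivenessThreshold permitted by
// inequality (2), for a given ConfirmationThreshold. All values are percentages.
// Hypothetical helper for illustration only.
func deriveThresholds(confirmation, reconstruction int) (safety, liveness int) {
	safety = confirmation - reconstruction // (1): Confirmation - Safety >= Reconstruction
	liveness = 100 - confirmation          // (2): Confirmation <= 100% - Liveness
	return safety, liveness
}

func main() {
	const reconstruction = 22 // reconstruction threshold assumed by the table
	for _, confirmation := range []int{55, 60, 65} {
		s, l := deriveThresholds(confirmation, reconstruction)
		fmt.Printf("confirmation=%d%% safety=%d%% liveness=%d%%\n", confirmation, s, l)
	}
}
```

Raising the ConfirmationThreshold trades liveness for safety one percentage point at a time.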

Implementation Details

In our code, we use slightly different names for the security thresholds compared to the notation in this document.

Here is the mapping from the notations in this doc to the variable names in the code:

- ConfirmationThreshold → securityThresholds.confirmationThreshold (in percent)

- SafetyThreshold → securityThresholds.adversaryThreshold (in percent)

- $c$ → blobParams.numChunks

- $n$ → blobParams.maxNumOperators

- $\gamma$ → 1 / blobParams.codingRate

Note that SafetyThreshold is called adversaryThreshold in the code.

Also, securityThresholds.confirmationThreshold and securityThresholds.adversaryThreshold are expressed in percent where the stored integer equals the required percentage.

For example, securityThresholds.confirmationThreshold = 55 means ConfirmationThreshold = 55%.

1. Safety Threshold

The check for the inequality (1) above is implemented as follows (see in code).

// Check for potential underflow: maxNumOperators must not exceed numChunks

if (blobParams.maxNumOperators > blobParams.numChunks) {

revert SecurityAssumptionsNotMet(

...

);

}

uint256 lhs = blobParams.codingRate * (blobParams.numChunks - blobParams.maxNumOperators) * (securityThresholds.confirmationThreshold - securityThresholds.adversaryThreshold);

uint256 rhs = 100 * blobParams.numChunks;

if (!(lhs >= rhs)) {

revert SecurityAssumptionsNotMet(

...

);

}

First, the code confirms that the total number of chunks is greater than the total number of validators so that ReconstructionThreshold is meaningful. Next, it validates the following inequality:

blobParams.codingRate * (blobParams.numChunks - blobParams.maxNumOperators) * (securityThresholds.confirmationThreshold - securityThresholds.adversaryThreshold) >= 100 * blobParams.numChunks

The inequality above can be rewritten as:

(securityThresholds.confirmationThreshold - securityThresholds.adversaryThreshold) / 100 >= blobParams.numChunks / (blobParams.codingRate * (blobParams.numChunks - blobParams.maxNumOperators))

By substituting the variables using the notation mapping shown at the beginning of this section and simplifying, we get:

(ConfirmationThreshold - SafetyThreshold) >= (c / (c - n)) * γ.

Recall that ReconstructionThreshold = (c / (c - n)) * γ, where c > n (see more details in Reconstruction Threshold).

Therefore, the inequality above is exactly inequality (1) shown in the previous subsection.
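As a sanity check, the same integer arithmetic can be mirrored off-chain. The Go sketch below is an illustrative re-implementation, not the contract code: the type names, the function `checkSecurityParams`, and the explicit guard against adversaryThreshold exceeding confirmationThreshold are assumptions made here (the guard avoids unsigned wrap-around in Go).

```go
package main

import (
	"errors"
	"fmt"
)

// BlobParams and SecurityThresholds mirror the fields described on this page.
type BlobParams struct {
	MaxNumOperators uint32
	NumChunks       uint32
	CodingRate      uint8
}

type SecurityThresholds struct {
	ConfirmationThreshold uint8 // percent
	AdversaryThreshold    uint8 // percent
}

var errSecurityAssumptionsNotMet = errors.New("security assumptions not met")

// checkSecurityParams is a hypothetical off-chain mirror of the on-chain check:
// codingRate * (numChunks - maxNumOperators) * (confirmation - adversary) >= 100 * numChunks.
func checkSecurityParams(p BlobParams, t SecurityThresholds) error {
	if p.MaxNumOperators > p.NumChunks {
		return errSecurityAssumptionsNotMet
	}
	// Assumption added for this sketch: reject instead of wrapping around.
	if t.AdversaryThreshold > t.ConfirmationThreshold {
		return errSecurityAssumptionsNotMet
	}
	lhs := uint64(p.CodingRate) *
		uint64(p.NumChunks-p.MaxNumOperators) *
		uint64(t.ConfirmationThreshold-t.AdversaryThreshold)
	rhs := 100 * uint64(p.NumChunks)
	if lhs < rhs {
		return errSecurityAssumptionsNotMet
	}
	return nil
}

func main() {
	thresholds := SecurityThresholds{ConfirmationThreshold: 55, AdversaryThreshold: 33}
	for _, n := range []uint32{3537, 3538} {
		params := BlobParams{MaxNumOperators: n, NumChunks: 8192, CodingRate: 8}
		fmt.Printf("maxNumOperators=%d err=%v\n", n, checkSecurityParams(params, thresholds))
	}
}
```

With the default parameters the left-hand side is 8 * 4655 * 22 = 819,280 and the right-hand side is 819,200, so the check passes with little slack; raising maxNumOperators to 3538 gives 819,104 and fails.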

2. Liveness Threshold

The LivenessThreshold does not appear in the code, but users should keep inequality (2) in mind when setting the ConfirmationThreshold.

System Default

The security thresholds are configured as follows (see in the code):

{

"eigenDAServiceManager": "0x3a5acf46ba6890B8536420F4900AC9BC45Df4764",

"eigenDAThresholdRegistry": "0x0DA66C1930Acc54809093Bb42f2e6a4bE21d5403",

"defaultSecurityThresholds": {

"0_confirmationThreshold": 55,

"1_adversaryThreshold": 33

},

"quorumNumbersRequired": "0x0001"

}

By default, the ConfirmationThreshold is 55%. With the default ReconstructionThreshold = 22%, the default ConfirmationThreshold gives a SafetyThreshold of 33% and a LivenessThreshold of 45%.

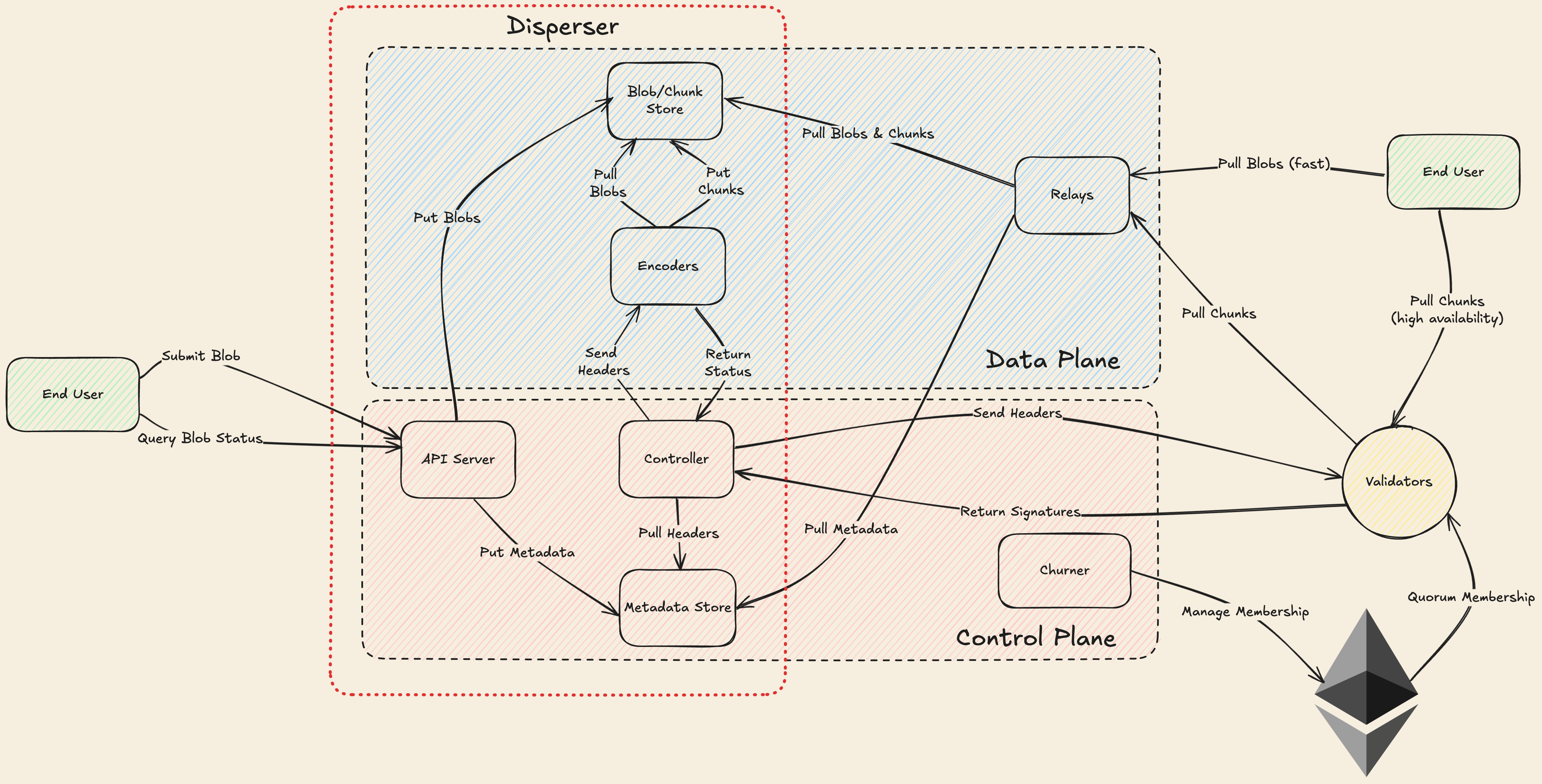

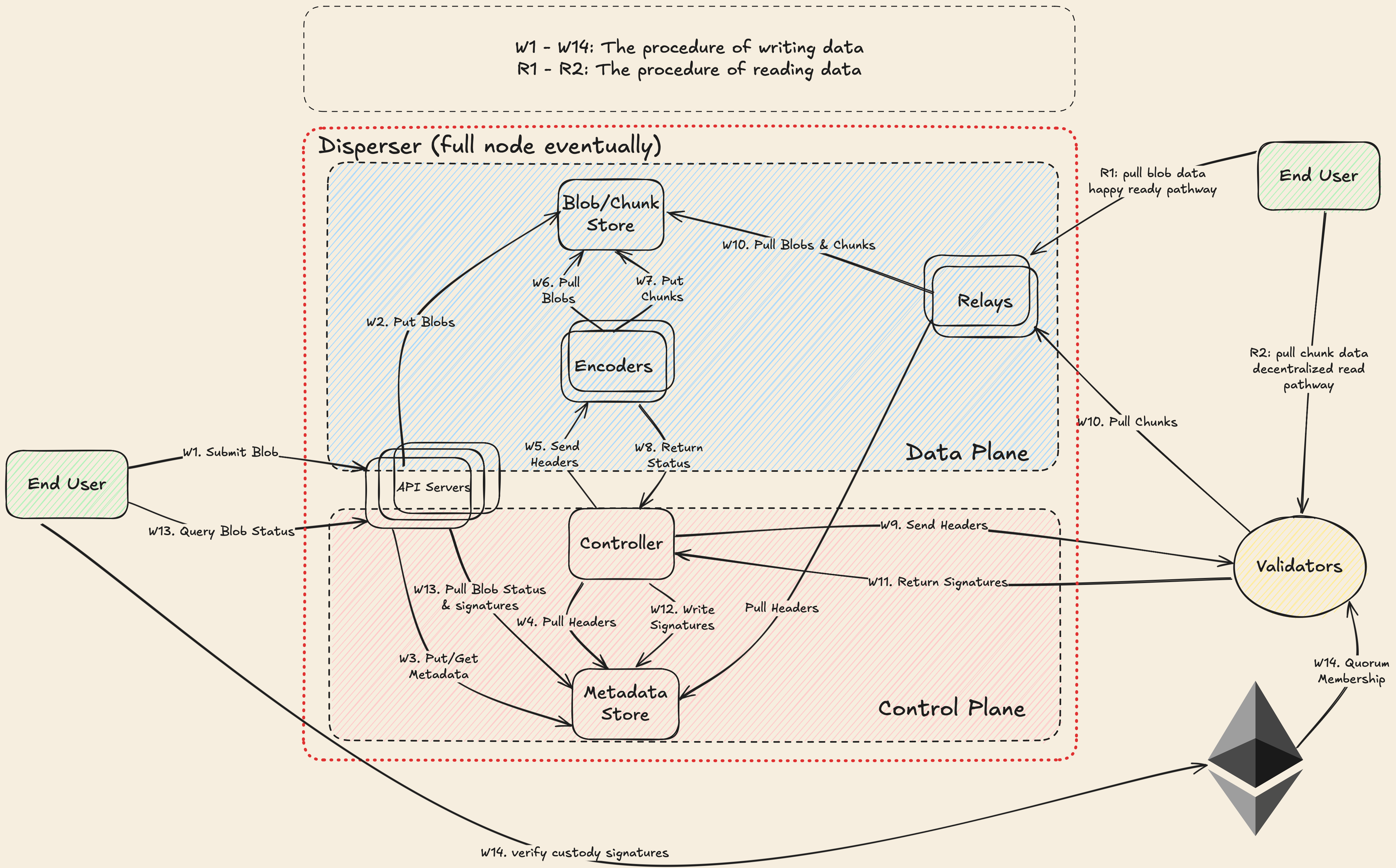

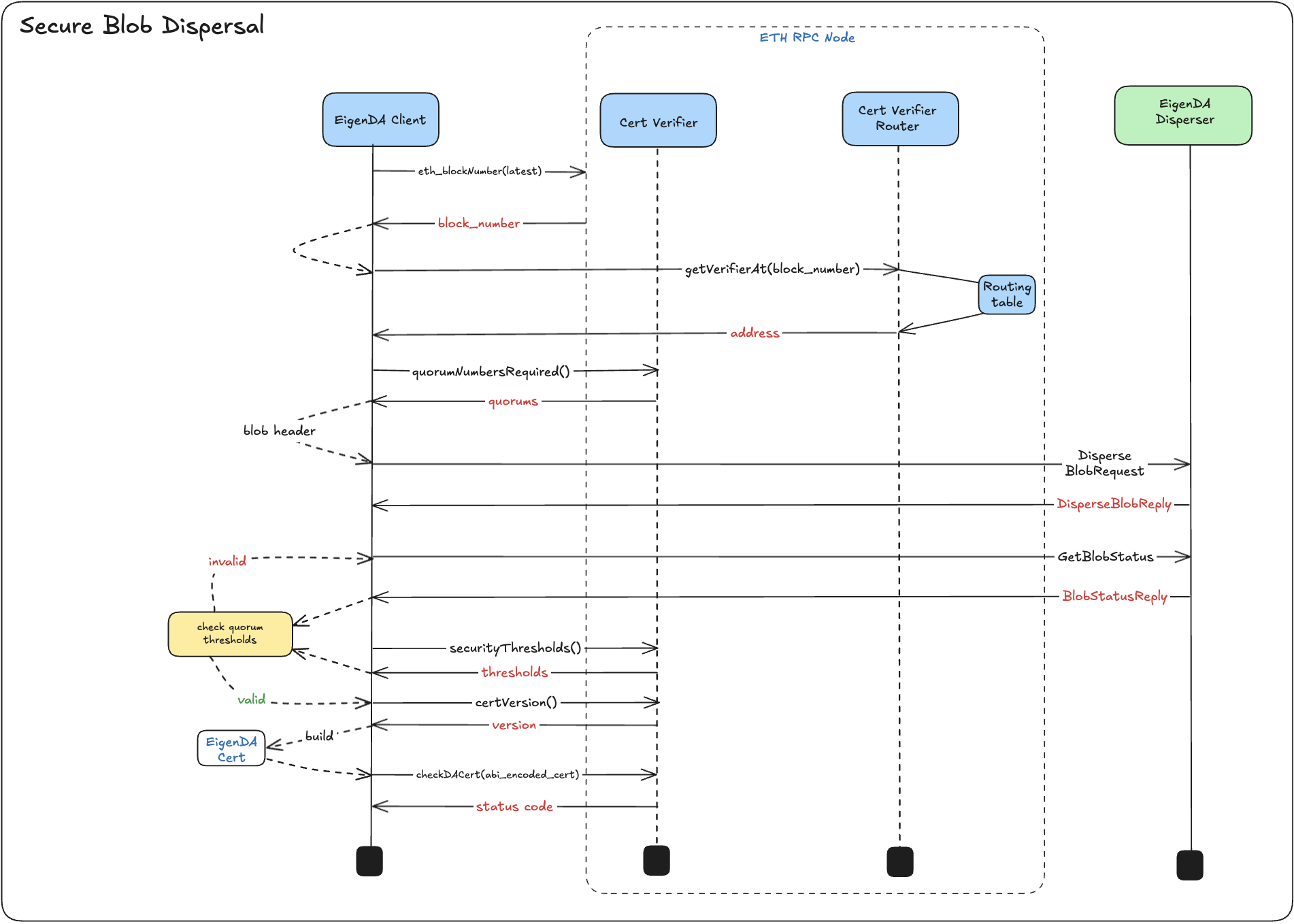

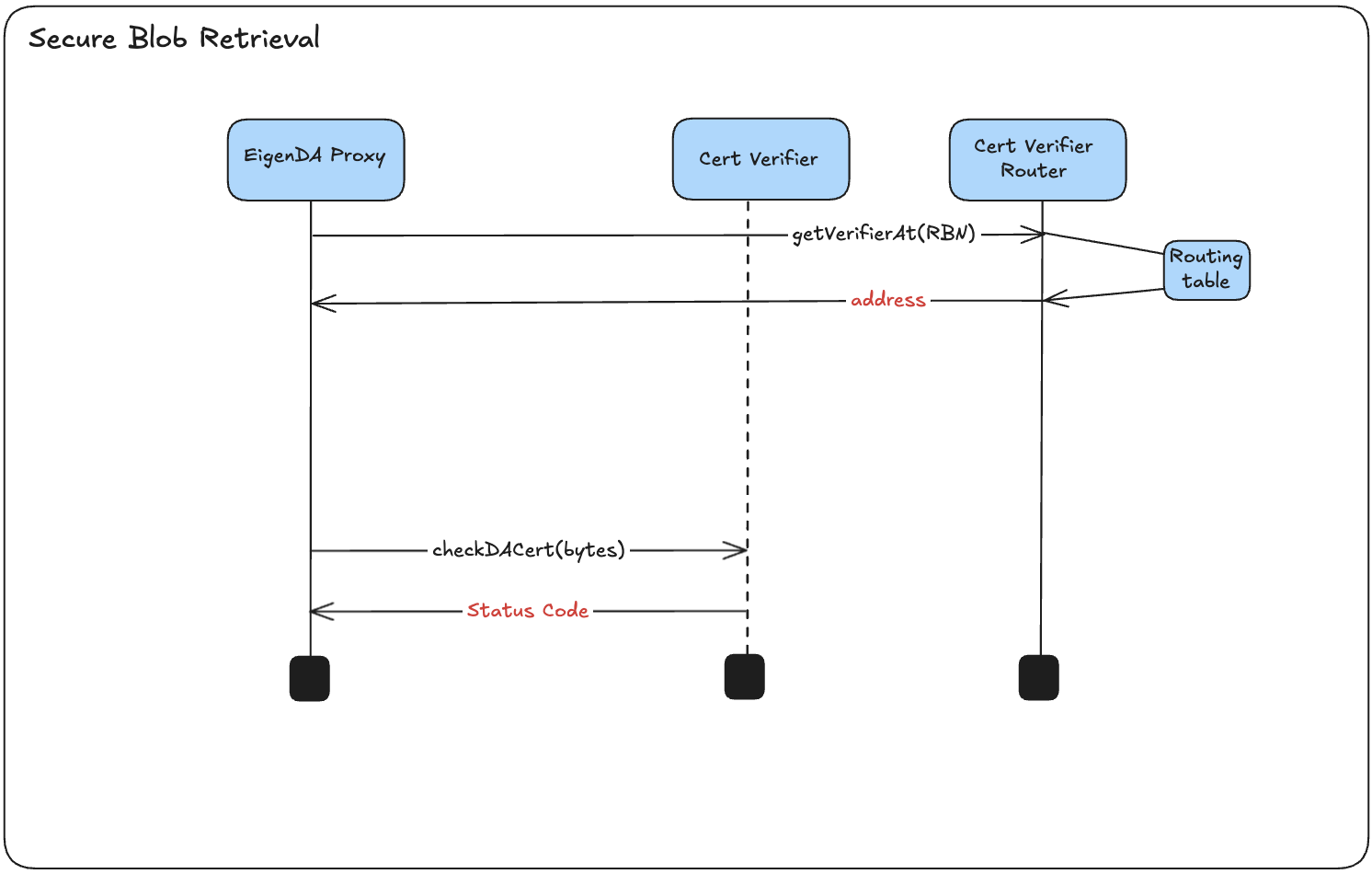

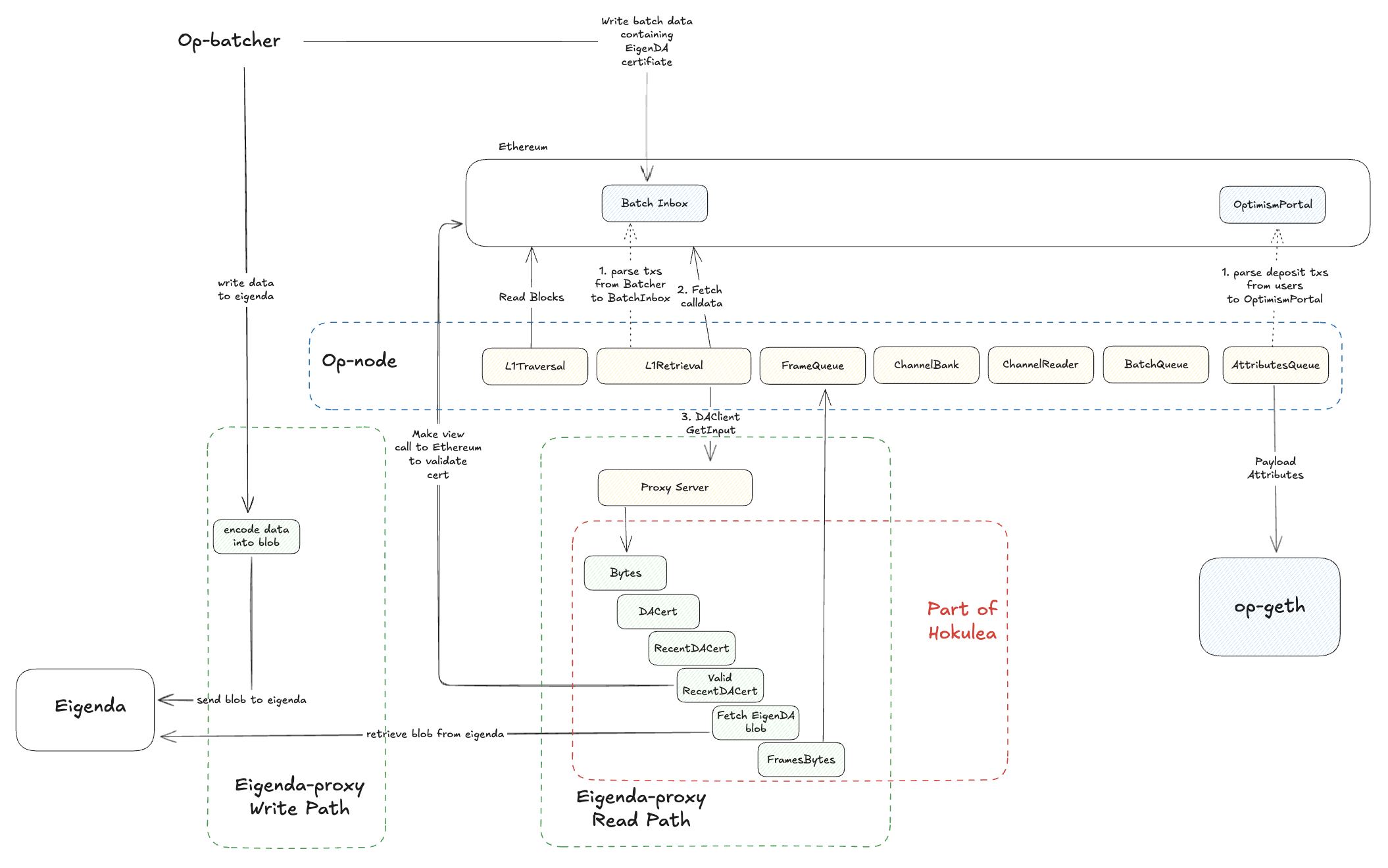

Write and Read Workflow

This page provides an overview of the workflow for writing data to and reading data from EigenDA. The workflow is illustrated in the diagram below.

Notes:

- The "end user" for writing and the "end user" for reading can be the same entity. They are shown separately in the diagram for clarity.

- We are planning to build full nodes that will perform the disperser's functionality plus additional duties.

Write

When a user writes data to EigenDA (in the form of a blob), the blob is encoded into chunks and distributed to the validators in accordance with the Chunk Assignment Logic. After enough validators have acknowledged receipt of their chunks and returned their signatures to the disperser, the disperser aggregates the signatures into a data availability (DA) certificate and sends it to the user upon request.

The write process follows the sequence below. The labels in parentheses (e.g., W1, W2) correspond to the steps shown in the diagram above.

-

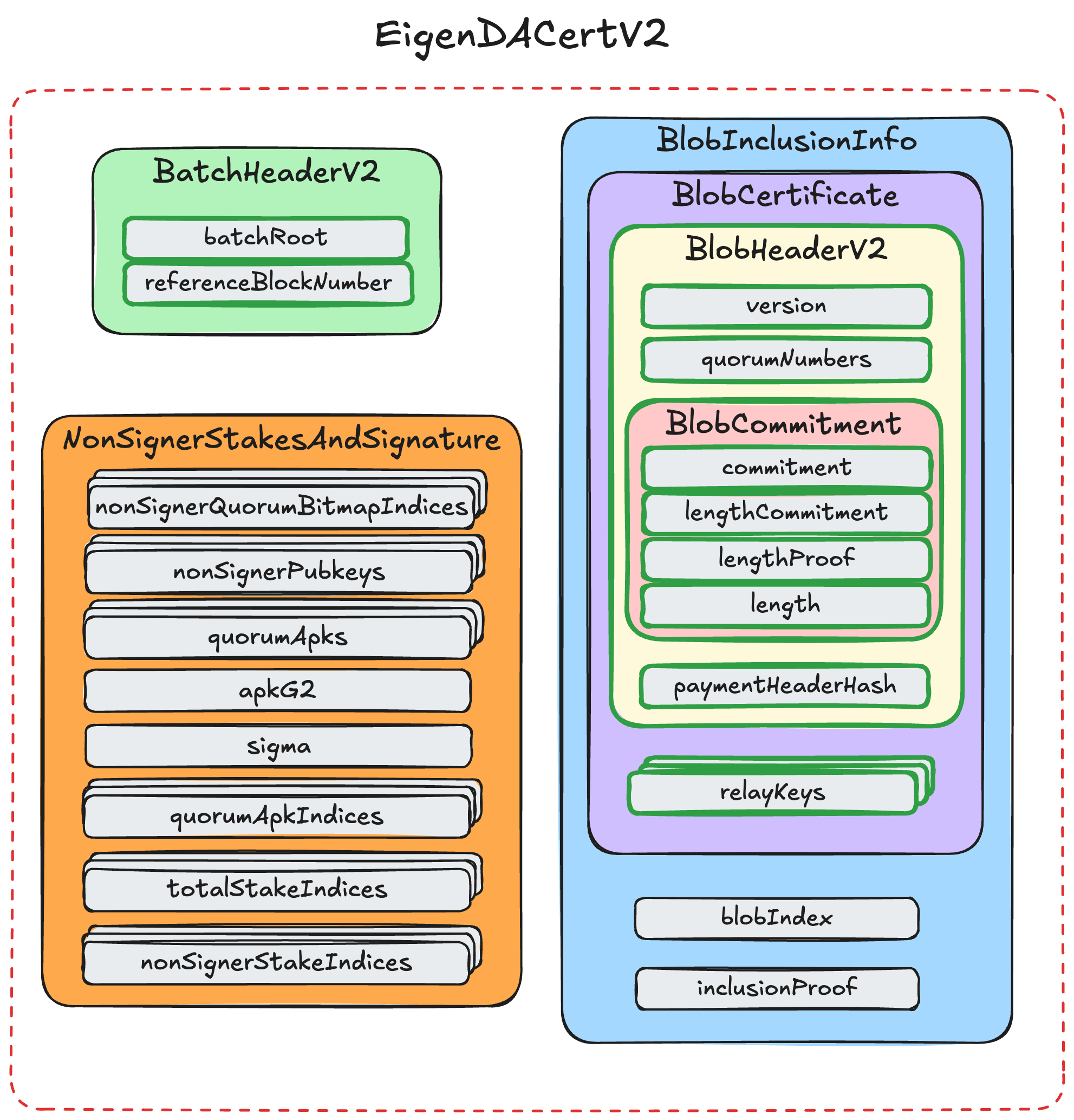

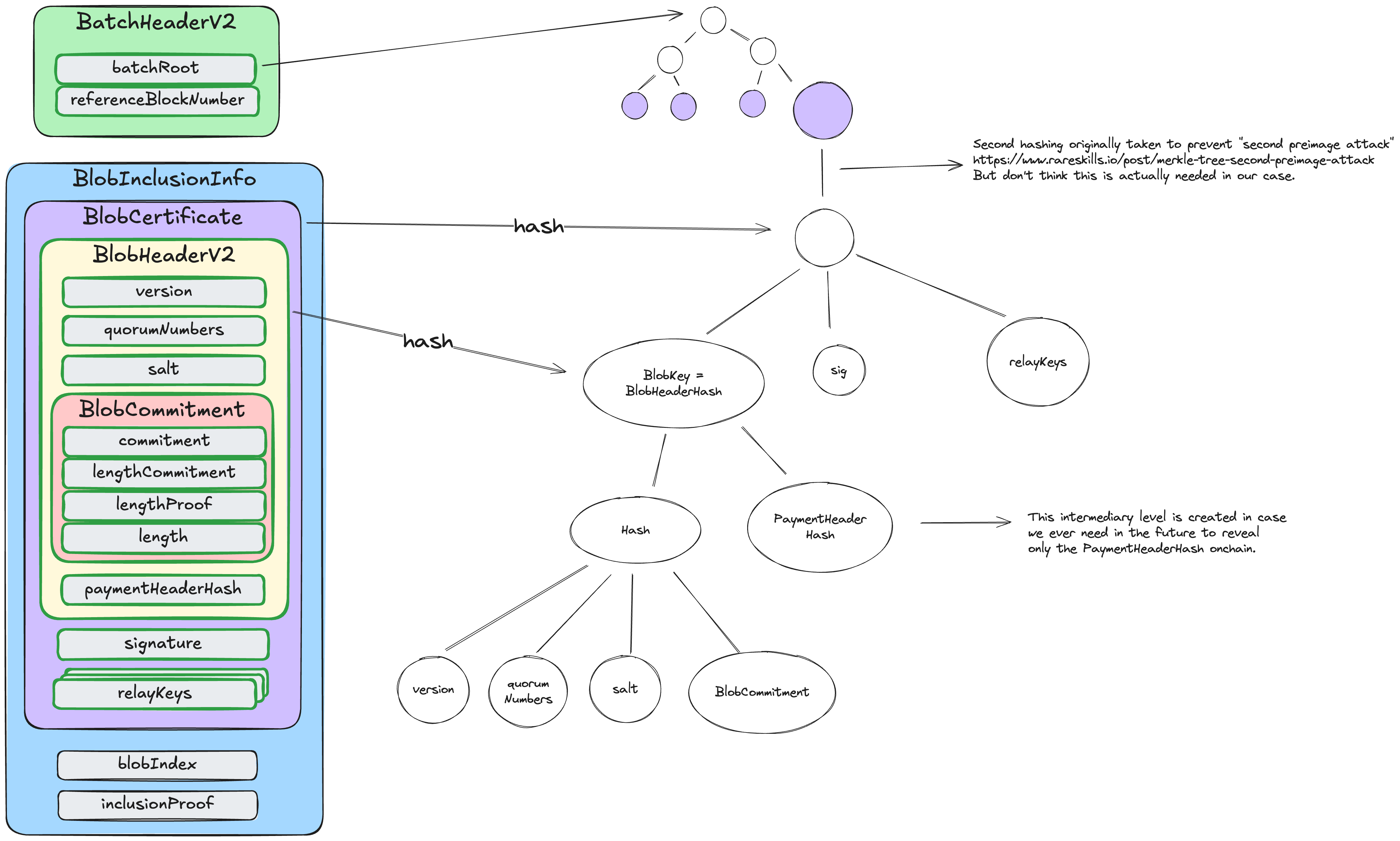

Disperser Receives Blob (W1, W2, W3). The disperser receives a blob consisting of a

BlobHeaderandBlobData. As a precaution, the disperser can validate thePaymentMetadatacontained in theBlobHeaderto ensure that the blob is properly funded, and that the KZG commitments in theBlobHeaderare correct. Note that validators may still reject payment data as invalid even if approved by the disperser, since the disperser lacks knowledge of global payment state (see Payment System for more details). -

Disperser Encodes Blob (W6, W7). The disperser references the Chunk Assignment Logic to translate the

BlobHeaderinto a set ofEncodingParams. The disperser then encodes the blob according to the Encoding Module and theEncodingParamsto produce a collection of encodedChunks. -

Disperser Serves Chunks. The disperser makes the encoded chunks available via the relay's

GetChunksinterface. This is an authenticated and rate-limited interface where each validator can only request its allocated amount of data. -

Disperser Constructs Blob Certificate. The disperser constructs a

BlobCertificateconsisting of theBlobHeaderand aRelayKey, which can be used to identify the relay URI where the associated chunks are available. -

Disperser Constructs Batch Header. The disperser constructs a

BatchHeaderconsisting of a Merkelized collection ofBlobCertificates and aReferenceBlockNumber, which anchors all blobs in the batch to a specific stake distribution on EigenLayer. -

Disperser Sends Batch Header (W9). The disperser sends the

BatchHeaderto the validators using theStoreChunksAPI. -

Validators Validate Batch Header. The validators validate the

PaymentMetadatafor eachBlobHeadercontained in the batch. If any blob contains improper payment information, the batch is rejected. -

Validators Download and Validate Chunks (W10, W11). For properly authorized batches, validators reference the Chunk Assignment Logic together with the

QuorumNumbersof eachBlobHeaderto determine which chunks they are responsible for hosting. Validators request all associated encoded chunks from theGetChunksinterface of the appropriate relays and validate that eachChunkmatches the corresponding blob's KZG commitment using the included opening proof. Validators also validate that each chunk has the correct length using the Chunk Assignment Logic. If any chunk is unavailable or cannot be validated, the batch is rejected. -

Validators Sign Batch Header (W12). For batches that successfully complete validation, each validator signs the batch header using the BLS identity registered in the

EigenDAServiceManagerand returns the signature to the disperser. -

Disperser Aggregates Signatures. The disperser aggregates the BLS signatures from the validators and returns a

Certificatecontaining theBatchHeader, aggregate signature, and inclusion information used for verifying that a blob is part of the batch.

Read

To read a blob, a client follows the sequence below. The labels in parentheses (e.g., R1, R2) correspond to the steps shown in the diagram above.

- Read from Relay (R1). The client attempts to retrieve the blob from the GetBlob interface of the relay(s) identified in the BlobHeader. This is the primary and most efficient retrieval method, as the relay stores complete blobs.

- Read from Validators (R2). If the blob is not available from the relay(s), the client falls back to retrieving individual chunks directly from the validators and reconstructing the blob. The client reconstructs chunk assignments for all validators assigned to the blob and downloads chunks in a random order until it has collected enough unique chunks to reconstruct the blob. Each chunk is validated using the included KZG proofs before the blob is reconstructed using the erasure coding scheme. This approach distributes load evenly across validators and terminates as soon as the minimum number of unique chunks has been verified.
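The validator fallback (R2) can be sketched as a loop that visits validators in random order and stops once enough unique chunk indices have been collected. The Go code below is a simplified illustration, not the EigenDA retrieval client: `fetchFunc`, `collectChunks`, and `demoFetch` are hypothetical names, and KZG verification is assumed to happen inside the fetch callback.

```go
package main

import (
	"errors"
	"fmt"
	"math/rand"
)

// fetchFunc is a hypothetical stand-in for downloading the chunks held by one
// validator and verifying them; it returns the indices of the verified chunks.
type fetchFunc func(validatorID int) ([]uint32, error)

// collectChunks visits validators in a random order (derived from seed) and
// returns as soon as minChunks unique chunk indices have been collected.
func collectChunks(validators []int, fetch fetchFunc, minChunks int, seed int64) (map[uint32]struct{}, error) {
	order := append([]int(nil), validators...)
	rng := rand.New(rand.NewSource(seed))
	rng.Shuffle(len(order), func(i, j int) { order[i], order[j] = order[j], order[i] })

	have := make(map[uint32]struct{})
	for _, id := range order {
		indices, err := fetch(id)
		if err != nil {
			continue // validator unreachable or chunks invalid: try the next one
		}
		for _, idx := range indices {
			have[idx] = struct{}{} // overlapping assignments are deduplicated
		}
		if len(have) >= minChunks {
			return have, nil
		}
	}
	return nil, errors.New("not enough unique chunks to reconstruct the blob")
}

// demoFetch models four validators with overlapping assignments; validator 3 is offline.
func demoFetch(validatorID int) ([]uint32, error) {
	assignment := map[int][]uint32{0: {0, 1, 2}, 1: {2, 3}, 2: {4, 5, 6}, 3: {6, 7}}
	if validatorID == 3 {
		return nil, errors.New("validator offline")
	}
	return assignment[validatorID], nil
}

func main() {
	got, err := collectChunks([]int{0, 1, 2, 3}, demoFetch, 5, 1)
	fmt.Println("unique chunks:", len(got), "err:", err)
}
```

Counting unique indices rather than raw downloads matters because assignments across quorums can overlap, so the same chunk may be served by more than one validator.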

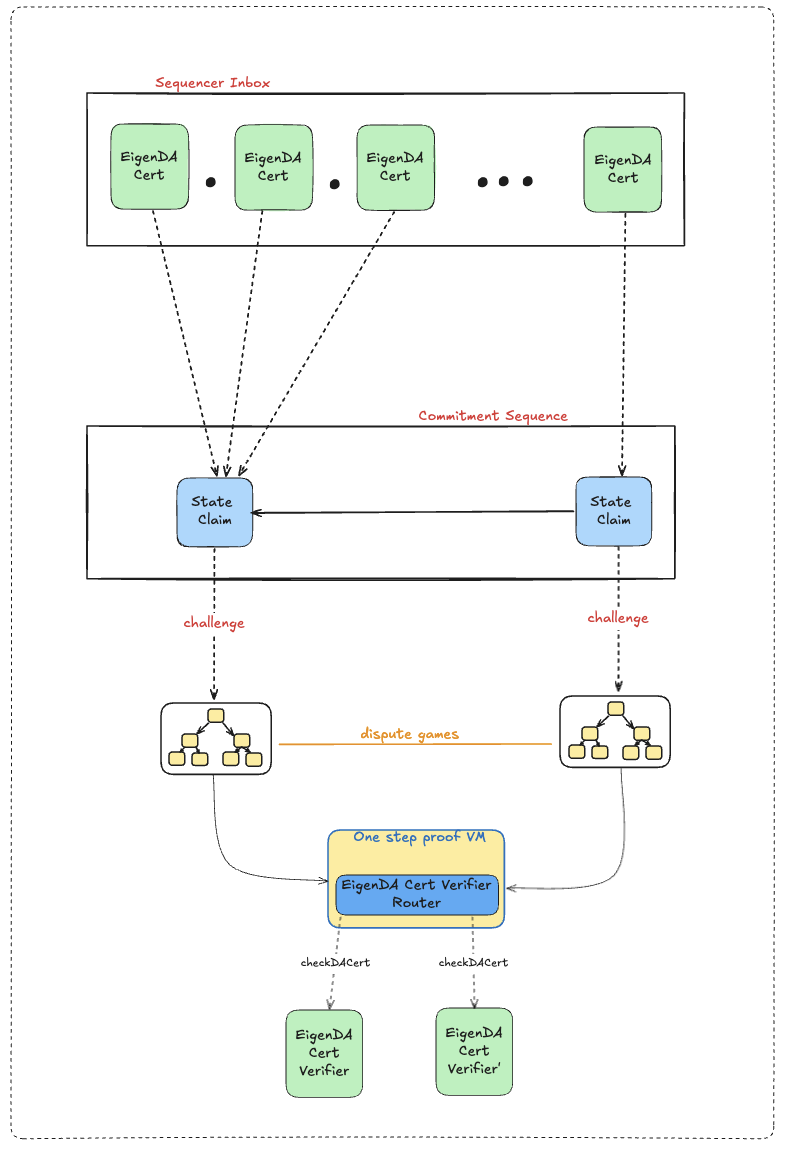

EigenDA Protocol Contracts

This page describes EigenDA contracts that are managed by EigenDA related actors (see the exact roles).

Warning: This page is incomplete and a work in progress as we are undergoing refactors of our contracts as well as some protocol upgrades. The details will change, but the information contained here should at least help to understand the important concepts.

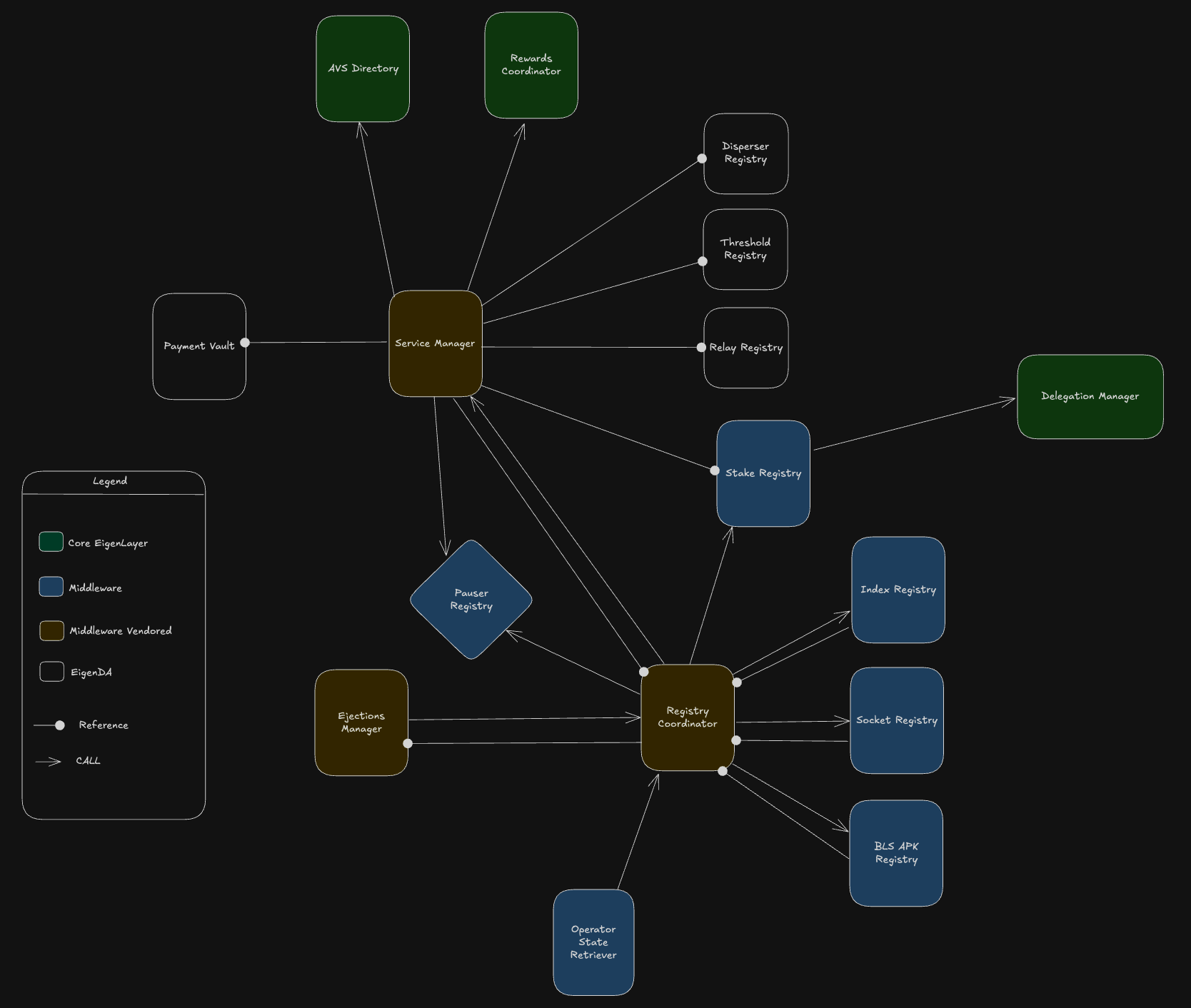

Overview

Middleware Contracts

We make use of eigenlayer-middleware contracts, which are fully documented here and described here. These contracts provide standard interfacing logic for operator state management and AVS representation.

Middleware Vendored Contracts

Some of the middleware contracts (e.g., EjectionsManager, RegistryCoordinator) have been directly vendored into the EigenDA project with minor modifications.

EigenDA Specific Contracts

The smart contracts can be found in our repo, and the deployment addresses on different chains can be found in the Networks section of our docs.

Integration Contracts

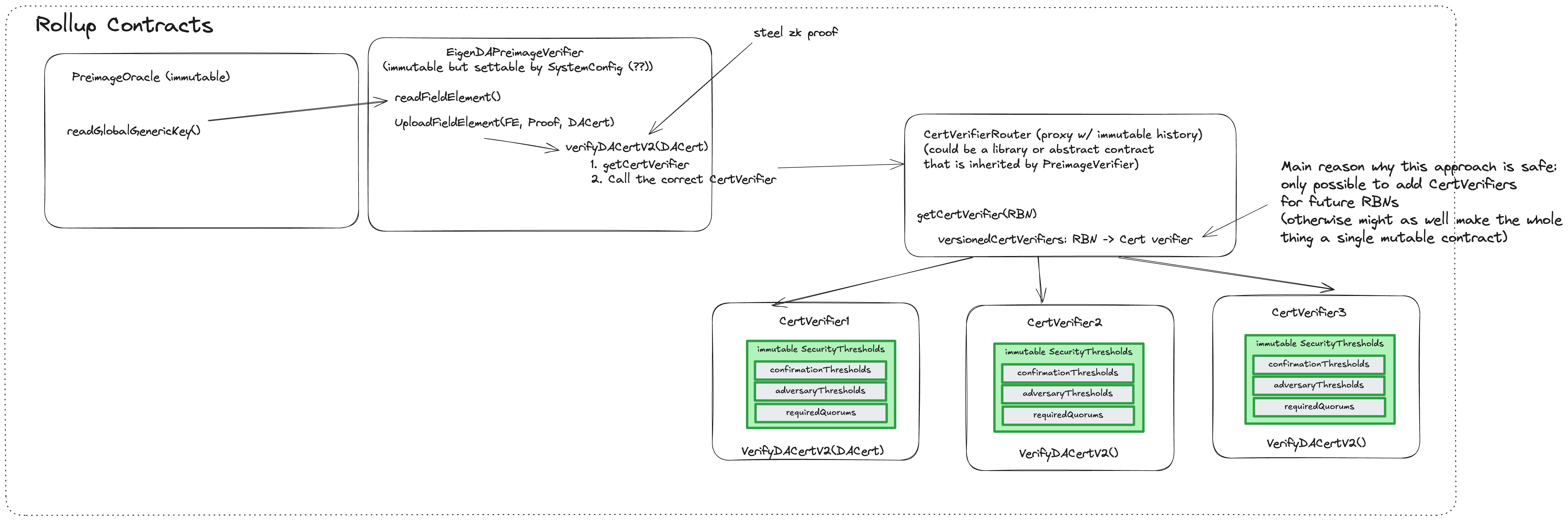

For EigenDA-related contracts that are managed by rollups, see the rollup managed contracts page.

The EigenDA team maintains one customer-facing contract, EigenDACertVerifier. However, using this contract directly is not recommended. The EigenDACertVerifier includes a certVersion parameter that, if upgraded without corresponding updates to a rollup’s offchain code, can lead to liveness outages. Relying on this contract places a rollup’s safety and liveness on EigenDA governance, which is generally discouraged.

Contracts Overview

| Contract Name | Project Category | Deployed Behind ERC1967 Proxy? | Used by Offchain EigenDA Protocol? |
|---|---|---|---|
| EigenDA Directory | eigenda | Yes | Yes |
| Service Manager | eigenda | Yes | Yes |
| Threshold Registry | eigenda | Yes | Yes |
| Relay Registry | eigenda | Yes | Yes |
| Disperser Registry | eigenda | Yes | Yes |
| Payment Vault | eigenda | Yes | Yes |
| Pauser Registry | middleware | No | No |
| BLS APK Registry | middleware | Yes | Yes |
| Index Registry | middleware | Yes | Yes |
| Stake Registry | middleware | Yes | Yes |
| Socket Registry | middleware | Yes | Yes |
| Operator State Retriever | middleware | No | Yes |
| Registry Coordinator | vendored middleware | Yes | Yes |
| Ejections Manager | vendored middleware | Yes | No |
| Cert Verifier Router | integrations | Yes | No |

EigenDADirectory

Description

This contract serves as the central discovery and reference point for all contracts composing the EigenDA system. It implements a lightweight namespace resolution protocol in which human-readable string keys are mapped to EigenDA contract addresses.

Access Mgmt

- Ownable role that can do unilateral entry key modifications

Offchain Usage

This dynamic naming pattern requires off-chain management of canonical contract keys, allowing clients and services to retrieve on-chain system context from a single directory contract reference rather than requiring every contract address to be hard-coded or passed through environment configuration.

EigenDAServiceManager

Description

Used for onchain AVS registration with the EigenLayer protocol, EigenDA V1 batching, storing protocol params, rewards distribution, and referencing EigenDA protocol contracts:

- Inherits the ServiceManagerBase for operator registration and rewards distribution.

- Manages batch settlement roles with a callable function (i.e., confirmBatch) that allows for EigenDA V1 batches to be confirmed and settled into a storage commitment sequence.

- Stores protocol params (i.e., BLOCK_STALE_MEASURE, BLOCK_STORE_DURATION) for offchain ingestion by DA validator nodes.

- Stores non-callable references to other EigenDA protocol contracts in storage (i.e., DisperserRegistry, ThresholdRegistry, RelayRegistry, StakeRegistry, PaymentVault).

Access Mgmt

- Pauser role that can halt EigenDA V1 batch settlement

- Ownable role that can modify batch confirmer EOA allow-list, AVS metadata, RewardsClaimee, and RewardsInitiator

- RegistryCoordinator role that can register/de-register operators through routed calls to the AVSDirectory (i.e., RegistryCoordinator -> EigenDAServiceManager -> AVSDirectory)

- RewardsInitiator role that can create operator directed and general AVS rewards via routed calls to the RewardsCoordinator contract (i.e., RewardsInitiator -> EigenDAServiceManager -> RewardsCoordinator)

Offchain Usage

TODO

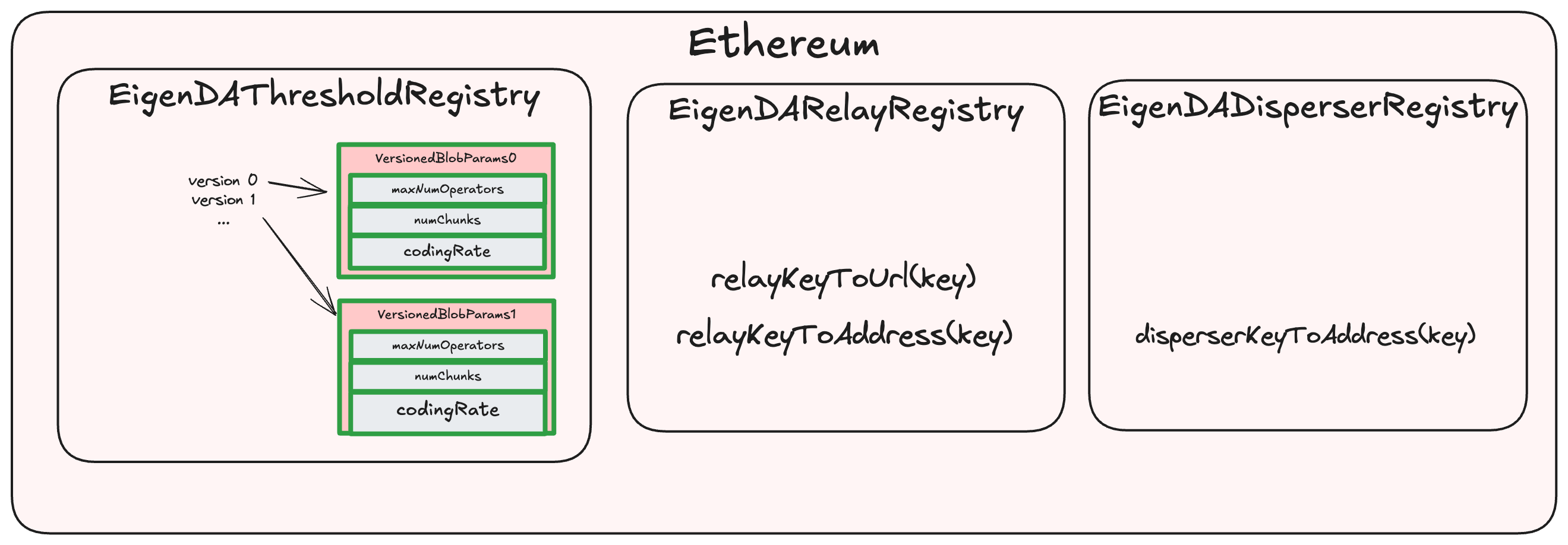

EigenDAThresholdRegistry

Description

The EigenDAThresholdRegistry contains two sets of protocol parameters:

/// @notice mapping of blob version id to the params of the blob version

mapping(uint16 => VersionedBlobParams) public versionedBlobParams;

struct VersionedBlobParams {

uint32 maxNumOperators;

uint32 numChunks;

uint8 codingRate;

}

/// @notice Immutable security thresholds for quorums

SecurityThresholds public defaultSecurityThresholdsV2;

struct SecurityThresholds {

uint8 confirmationThreshold;

uint8 adversaryThreshold;

}

The securityThresholds are currently immutable. The confirmation and adversary thresholds are sometimes loosely referred to as the liveness and safety thresholds:

- Confirmation Threshold: minimum percentage of stake that must sign for a DA certificate to be valid. It determines liveness: by inequality (2) in Security Parameters, an attacker must control more than 100% minus this value to mount a liveness attack on the system.

- Adversary Threshold (aka safety threshold): minimum percentage of stake which an attacker must control in order to mount a first-order safety attack on the system.

Their default values are currently set as:

defaultSecurityThresholdsV2 = {

confirmationThreshold = 55,

adversaryThreshold = 33,

}

New BlobParam versions are introduced only rarely, by EigenDA Foundation Governance. When dispersing a blob, rollups explicitly specify the version they wish to use. Currently, only version 0 is defined, with the following parameters (reference):

versionedBlobParams[0] = {

maxNumOperators = 3537,

numChunks = 8192,

codingRate = 8,

}

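The five parameters are related by the following inequality, which is also verified onchain by the verifyBlobSecurityParams function:

$$\text{codingRate} \cdot (\text{numChunks} - \text{maxNumOperators}) \cdot (\text{confirmationThreshold} - \text{adversaryThreshold}) \geq 100 \cdot \text{numChunks}$$

where confirmationThreshold and adversaryThreshold are expressed in percent.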

EigenDARelayRegistry

Description

Contains EigenDA network registered Relays' Ethereum address and DNS hostname or IP address. BlobCertificates contain relayKeys, which can be transformed into that relay's URL by calling relayKeyToUrl.

Access Mgmt

- Ownable role that can register new relay entries

Offchain Usage

TODO

EigenDADisperserRegistry

Description

Contains EigenDA network registered Dispersers' Ethereum address. The EigenDA Network currently only supports a single Disperser, hosted by EigenLabs. The Disperser's URL is currently static and unchanging, and can be found on our docs site in the Networks section.

Access Mgmt

- Ownable role that can register new dispersers

Offchain Usage

TODO

PaymentVault

Description

Payment contract used to escrow on-demand funds, hold user reservations, and define global payment parameters used by the network (i.e, globalSymbolsPerPeriod, reservationPeriodInterval, globalRatePeriodInterval).

Access Mgmt

- Ownable role that can set payment reservations

Offchain Usage

TODO

PauserRegistry

Description

Manages a stateful mapping of pausers that can be arbitrarily added or revoked. This contract is assumed to be deployed immutably. The pauser mapping is checked by the caller:

- Mapping checked as prerequisite for pausing batch confirmation logic in EigenDAServiceManager

- Mapping checked as prerequisite for pausing operator state update logic in RegistryCoordinator

Access Mgmt

- Unpauser (or admin) role that can set / remove existing pausers

Offchain Usage

TODO

BLSApkRegistry

Description

This contract stores each operator's BLS public key as well as per quorum aggregate public keys which are only updatable by the RegistryCoordinator.

Access Mgmt

- RegistryCoordinator role that can invoke aggregate key updates via the registration/de-registration of operators

Offchain Usage

TODO

IndexRegistry

Description

Maintains an ordered, historically versioned list of operators for each quorum, allowing the RegistryCoordinator to register or deregister operators while preserving full block-by-block history of operator counts and index assignments. It provides efficient read functions to reconstruct the operator set at any block.

Access Mgmt

- RegistryCoordinator role that makes stateful updates when registering / deregistering quorum operators

Offchain Usage

TODO

StakeRegistry

Description

Stores stake updates bounded by block number and quorum strategy:

struct StakeUpdate {

// the block number at which the stake amounts were updated and stored

uint32 updateBlockNumber;

// the block number at which the *next update* occurred.

/// @notice This entry has the value **0** until another update takes place.

uint32 nextUpdateBlockNumber;

// stake weight for the quorum

uint96 stake;

}
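To illustrate how such block-bounded entries can be read, here is a minimal Go sketch that looks up the stake in effect at a given block. It assumes an update is valid from updateBlockNumber (inclusive) until nextUpdateBlockNumber (exclusive), with 0 meaning "still current", as the struct comments suggest. The function `stakeAt` is hypothetical and not the registry's actual lookup routine.

```go
package main

import "fmt"

// StakeUpdate mirrors the on-chain struct above. The stake is a uint96
// on-chain; uint64 is used here purely to keep the sketch simple.
type StakeUpdate struct {
	UpdateBlockNumber     uint32
	NextUpdateBlockNumber uint32 // 0 until another update takes place
	Stake                 uint64
}

// stakeAt returns the stake in effect at blockNumber, or ok=false if no entry
// in history covers that block. Hypothetical helper for illustration only.
func stakeAt(history []StakeUpdate, blockNumber uint32) (stake uint64, ok bool) {
	// Scan from the newest entry, since recent blocks are the common case.
	for i := len(history) - 1; i >= 0; i-- {
		u := history[i]
		if blockNumber < u.UpdateBlockNumber {
			continue // entry starts after the queried block
		}
		if u.NextUpdateBlockNumber == 0 || blockNumber < u.NextUpdateBlockNumber {
			return u.Stake, true
		}
	}
	return 0, false
}

func main() {
	history := []StakeUpdate{
		{UpdateBlockNumber: 100, NextUpdateBlockNumber: 200, Stake: 10},
		{UpdateBlockNumber: 200, NextUpdateBlockNumber: 0, Stake: 25},
	}
	for _, b := range []uint32{99, 150, 200, 5000} {
		s, ok := stakeAt(history, b)
		fmt.Printf("block=%d stake=%d found=%v\n", b, s, ok)
	}
}
```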

Access Mgmt

- Ownable role that can deploy and modify staking strategies

- RegistryCoordinator role that makes stateful updates when registering / deregistering quorum operators

Offchain Usage

TODO

SocketRegistry

Description

Stores stateful mapping of operator ID => socket where socket is the operator's DNS hostname.

Access Mgmt

- RegistryCoordinator role that makes stateful updates when registering / deregistering quorum operators

Offchain Usage

TODO

OperatorStateRetriever

Description

A stateless read-only contract that does exhaustive lookups against the registry coordinator for fetching operator metadata. This bundles stored procedure logic to avoid exhaustive RPC calls made to view functions by offchain EigenDA services.

Access Mgmt

N/A

Offchain Usage

TODO

EigenDARegistryCoordinator

Description

This contract orchestrates operator lifecycle across EigenDA's stake, BLS key, index, and socket registries - handling:

- registration, deregistration

- churning

- stake-updates

- quorum creation/config

- historical quorum-bitmap tracking

Access Mgmt

- Pauser role that can halt operator state updates

- Ownable role that can add new quorums, operator set params, & ejector params / role changes

- Ejector role that can invoke an ejection function to forcibly deregister an operator

Offchain Usage

TODO

EigenDAEjectionsManager

Description

Coordinates the lifecycle of ejecting non-responsive operators from EigenDA. It allows an Ejector role to queue and complete ejections. Each queued ejection has a corresponding bond attached by the Ejector. The targeted operator can cancel the ejection by providing a signature before the ejection becomes "confirmable", which happens after a number of DelayBlocks.

Access Mgmt

- Ownable role that can change public parameters (i.e., DelayBlocks, CooldownBlocks)

- Ejector role that can invoke an ejection function to forcibly deregister an operator

Offchain Usage

TODO

CertVerifierRouter

Description

See here.

Access Mgmt

- Ownable role that can add new EigenDACertVerifier entries at a new activation block number

Offchain Usage

This dynamic naming pattern requires off-chain management of canonical contract keys, allowing clients and services to retrieve on-chain system context from a single directory contract reference rather than requiring every contract address to be hard-coded or passed through environment configuration.

Governance Roles

There are four key governance roles in the EigenDA contracts seen across network environments (i.e, mainnet, hoodi-testnet, hoodi-preprod, sepolia-testnet):

- ERC1967 ProxyAdmin that can upgrade implementation contracts

- Owner that can perform sensitive stateful operations across protocol contracts

- Pauser that can halt stateful updates on the ServiceManager and RegistryCoordinator contracts. This role is managed by the immutable PauserRegistry contract

- Ejector that can initialize and complete ejection requests via the EjectionsManager contract

Decentralized Validator Set Governance

Overview

EigenDA's validator set governance manages validator entry and exit in a decentralized way. This document describes the ejection and churning protocols that govern how validators leave and join the EigenDA validator set.

The protocol includes:

- Ejection: dispersers may eject under-performing validators, with validators able to cancel ejections.

- Churner: an on-chain function that removes the validator with the smallest amount of stake to allow a validator to join when the validator set is full.

1. Ejection Protocol

The ejection protocol maintains EigenDA's liveness and quality of service by allowing dispersers to eject honest but under-performing validators.

1.1 Protocol Actors

| Actor | Role | Implementation |
|---|---|---|
| Ejector (Disperser) | Monitors validator performance and initiates ejections | ejector/ |
| Ejectee (Validator) | Monitors ejection attempts and defends against unjust ejections | node/ejection/ejection_sentinel.go |
| Ejection Manager | Smart contract coordinating ejection lifecycle | EigenDAEjectionManager.sol |

1.2 Ejection Initiation

The ejection lifecycle is managed by the BeginEjection() method in ejector/ejection_manager.go:127-193, which performs all pre-flight checks before initiating an on-chain ejection.

1.2.1 Ejector Authorization

Only authorized dispersers can initiate ejections. Authorized disperser addresses are stored in an allow-list within the EigenDAEjectionManager contract. Initially, this list contains only the EigenDA disperser operated by EigenLabs. The list can be expanded as additional dispersers become available.

Implementation: The contract enforces this via the onlyEjector modifier (EigenDAEjectionManager.sol:66-69), which checks the EJECTOR_ROLE using AccessControl.

1.2.2 Automatic Ejection Decision-Making

The disperser monitors validator performance over a configurable time window (performance_evaluation_window, default: 10 minutes) and computes each validator's signing_rate.

A validator becomes eligible for ejection only when all of the following conditions are met:

- Zero signing rate: The validator's signing_rate is zero over the evaluation window

- Cool-down period elapsed: DISPERSER_COOL_DOWN has passed since the last ejection attempt against this validator

- Selective non-participation: Other validators show non-zero signing rates during the same period

These rules prevent ejections during network-wide outages and limit the transaction fees wasted on potentially malicious validators who repeatedly cancel ejections while continuing to under-perform.

Implementation: The evaluation logic is in ejector/ejector.go:102-184. The ejection criterion is implemented as:

// ejector/ejector.go:146

isEjectable := signingRate.GetSignedBatches() == 0 && signingRate.GetUnsignedBatches() > 0

This ensures a validator is only ejectable if they signed zero batches but there were batches to sign (selective non-participation). The evaluation window is configured via EjectionCriteriaTimeWindow in ejector/ejector_config.go:41-45.

1.2.3 Non-Ejection List

The disperser maintains a non-ejection list to handle validators that repeatedly cancel ejections without actually performing their duties. When a validator's failed ejection attempts reach MAX_FAILURE_TIMES, they are added to this list and automatic ejection stops. Human intervention is then required to deal with these validators. This list can also be manually configured.

Implementation: The non-ejection list (called ejectionBlacklist) is maintained in ejector/ejection_manager.go:54-60. Failed attempts are tracked in the failedEjectionAttempts map (lines 68-72), and validators are added to the blacklist in handleAbortedEjection (lines 384-412). The threshold is configured via MaxConsecutiveFailedEjectionAttempts in ejector/ejector_config.go:53-54, with a default value of 5.
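The bookkeeping described above can be modelled in a few lines. The Go sketch below is a simplified illustration and not the code in ejector/ejection_manager.go: the type `failureTracker` and its methods are hypothetical, and resetting the counter after a completed ejection is an assumption based on the word "Consecutive" in the configuration name.

```go
package main

import "fmt"

// failureTracker is a hypothetical, simplified model of the non-ejection list.
type failureTracker struct {
	maxFailures int
	failures    map[string]int  // consecutive aborted ejections per validator
	blacklist   map[string]bool // validators excluded from automatic ejection
}

func newFailureTracker(maxFailures int) *failureTracker {
	return &failureTracker{
		maxFailures: maxFailures,
		failures:    make(map[string]int),
		blacklist:   make(map[string]bool),
	}
}

// recordAborted notes that an ejection against the validator was cancelled and
// reports whether the validator is now on the non-ejection list.
func (t *failureTracker) recordAborted(validator string) bool {
	if t.blacklist[validator] {
		return true
	}
	t.failures[validator]++
	if t.failures[validator] >= t.maxFailures {
		t.blacklist[validator] = true
		delete(t.failures, validator)
	}
	return t.blacklist[validator]
}

// recordCompleted resets the consecutive-failure counter (assumed behaviour).
func (t *failureTracker) recordCompleted(validator string) {
	delete(t.failures, validator)
}

// canEject reports whether automatic ejection may still target the validator.
func (t *failureTracker) canEject(validator string) bool {
	return !t.blacklist[validator]
}

func main() {
	tracker := newFailureTracker(5) // default threshold from the text above
	for attempt := 1; attempt <= 5; attempt++ {
		listed := tracker.recordAborted("validator-a")
		fmt.Printf("attempt=%d onNonEjectionList=%v\n", attempt, listed)
	}
}
```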

1.2.4 Manual Ejection

In addition to automatic ejection based on performance monitoring, dispersers can manually initiate ejections against specific validators.

1.3 Ejection Logic in the Smart Contract

The EigenDAEjectionManager contract enforces the following constraints before accepting an ejection request:

- Rate Limiting: At least EJECTION_COOL_DOWN (30 minutes) must have passed since the previous ejection attempt against the same validator

- Concurrency Control: At most one active ejection is allowed per validator at any given time

Upon accepting a valid ejection request, the contract:

- Records the ejection in contract storage

- Starts a cancellation window of duration RESPONSE_TIME (30 minutes)

- Emits an ejection event that validators monitor

Implementation: The constraint checks are enforced in EigenDAEjectionLib.sol:

// EigenDAEjectionLib.sol:36-42

require(ejectee.record.proceedingTime == 0, "Ejection already in progress");

require(ejectee.lastProceedingInitiated + s().cooldown <= block.timestamp, "Ejection cooldown not met");

ejectee.record.ejector = ejector;

ejectee.record.quorums = quorums;

ejectee.record.proceedingTime = uint64(block.timestamp) + s().delay;

ejectee.lastProceedingInitiated = uint64(block.timestamp);

The first require enforces concurrency control (one ejection per validator), the second enforces the cooldown period, and the delay parameter sets the cancellation window duration.
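To make the interaction between the two checks easier to reason about off-chain, here is a small Go model of the same logic. It is only a sketch: the ejectee struct keeps just the two timestamp fields from the quoted snippet, timestamps are plain seconds, and startEjection and the error values are hypothetical names.

```go
package main

import "errors"

// ejectee keeps only the fields used by the constraint checks quoted above.
type ejectee struct {
	proceedingTime          uint64 // 0 means no ejection is in progress
	lastProceedingInitiated uint64 // timestamp of the last accepted request
}

var (
	errInProgress = errors.New("ejection already in progress")
	errCooldown   = errors.New("ejection cooldown not met")
)

// startEjection models the on-chain checks. All arguments are in seconds:
// now is the block timestamp, cooldown corresponds to EJECTION_COOL_DOWN,
// and delay corresponds to RESPONSE_TIME.
func startEjection(e *ejectee, now, cooldown, delay uint64) error {
	// Concurrency control: at most one active ejection per validator.
	if e.proceedingTime != 0 {
		return errInProgress
	}
	// Rate limiting: the cooldown must have elapsed since the last request.
	if e.lastProceedingInitiated+cooldown > now {
		return errCooldown
	}
	e.proceedingTime = now + delay // end of the cancellation window
	e.lastProceedingInitiated = now
	return nil
}
```

Note that in this model the cooldown is measured from the moment the previous ejection was initiated, not from the moment it was cancelled or finalized, which matches the use of lastProceedingInitiated in the Solidity snippet.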

1.4 Validator Defense (Cancellation)

1.4.1 Ejection Monitoring

Each validator node runs an ejection sentinel (node/ejection/ejection_sentinel.go) that continuously monitors the EigenDAEjectionManager contract for ejection events targeting that validator.

1.4.2 Cancellation Modes

Validators operate in one of two modes, configurable via a trusted dispersers list:

| Mode | Condition | Behavior |
|---|---|---|
| Mode 1 | Ejector is in trusted dispersers list | No cancellation sent (validator trusts ejector's judgment) |
| Mode 2 | Ejector is not in trusted dispersers list | Cancel if validator is online and running compliant software version |

Default Configuration: The trusted dispersers list is empty by default, meaning validators operate in Mode 2 for all ejectors.
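The mode selection reduces to a single predicate. The sketch below is illustrative only: shouldCancel and its parameters are hypothetical names, and the "online" and "compliant version" inputs are assumed to be computed elsewhere by the validator.

```go
package main

// shouldCancel reports whether a validator should submit a cancellation for
// an ejection initiated by the given ejector address.
func shouldCancel(ejector string, trustedDispersers map[string]bool, online, compliantVersion bool) bool {
	// Mode 1: the ejector is trusted, so the validator defers to its judgment.
	if trustedDispersers[ejector] {
		return false
	}
	// Mode 2: contest the ejection only if the validator is actually healthy.
	return online && compliantVersion
}
```

Because looking up a key in a nil or empty Go map returns false, an empty trusted dispersers list places every ejector in Mode 2, consistent with the default configuration described above.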

Note: Validators must configure a wallet to submit cancellation transactions. Until most validators have set up their cancellation infrastructure, only the EigenDA disperser will be authorized as a valid ejector.

1.4.3 Cancellation Process

To cancel an ejection, the validator:

- Generates cancellation message containing:

  - Chain ID (identifying which L1 blockchain)

  - Validator's address

  - Block height at which the ejection was initiated

- Signs the message using the validator's BLS private key

- Submits transaction to EigenDAEjectionManager containing the signed cancellation message

If the cancellation is received within the RESPONSE_TIME window and the signature is valid, the ejection is canceled and the validator remains in the validator set.

1.5 Ejection Finalization

If no valid cancellation is received before the RESPONSE_TIME window expires, any disperser can finalize the ejection by submitting a finalizing transaction to the contract. Upon finalization, the validator is deregistered from the EigenDA validator set via a call to EigenDARegistryCoordinator.

1.6 Rejoining After Ejection

Validators that have been ejected are subject to a cool-down period of 1 day before they can rejoin the validator set.

1.7 Protocol Parameters

| Parameter | Value | Description | Implementation |
|---|---|---|---|
| RESPONSE_TIME | 30 minutes | Cancellation window duration | delay in EigenDAEjectionStorage.sol:40-42 |
| EJECTION_COOL_DOWN | 30 minutes | Minimum time between ejection attempts for same validator | cooldown in EigenDAEjectionStorage.sol:40-42 |
| DISPERSER_COOL_DOWN | 24 hours (default) | Cool-down before retrying ejection after failed attempt | EjectionRetryDelay in ejector/ejector_config.go:50-51 |
| MAX_FAILURE_TIMES | 5 (default) | Failed ejection attempts before adding to non-ejection list | MaxConsecutiveFailedEjectionAttempts in ejector/ejector_config.go:53-54 |
| performance_evaluation_window | 10 minutes (default) | Time window for computing signing rate | EjectionCriteriaTimeWindow in ejector/ejector_config.go:41-45 |
| Rejoin cool-down | 1 day | Wait time before ejected validator can rejoin | (Contract-level parameter) |

1.9 Implementation References

| Component | Path |
|---|---|
| Ejector service | ejector/ |
| Ejection sentinel | node/ejection/ejection_sentinel.go |
| Ejection manager contract | contracts/src/periphery/ejection/EigenDAEjectionManager.sol |
| Ejection library | contracts/src/periphery/ejection/libraries/EigenDAEjectionLib.sol |
| Ejection types | contracts/src/periphery/ejection/libraries/EigenDAEjectionTypes.sol |
| Ejection storage | contracts/src/periphery/ejection/libraries/EigenDAEjectionStorage.sol |

2. Churning Protocol

The churning protocol governs how new validators join the EigenDA validator set when the maximum validator capacity has been reached. The churning logic is computed entirely on-chain.

2.1 Overview

When the validator set is at maximum capacity, a new validator can only join by "churning out" the existing validator with the smallest stake. The smart contract automatically identifies and ejects the smallest-stake validator to make room for the higher-stake incoming validator.

2.2 On-Chain Churn Selection

The EigenDARegistryCoordinator contract implements the churn selection logic:

- A new validator attempts to register while the validator set is at capacity

- The contract iterates through all current validators in the set and identifies the validator with the smallest stake

- The contract automatically deregisters the smallest-stake validator

- The contract registers the new validator

Implementation: The main registration logic is in registerOperator() (EigenDARegistryCoordinator.sol:108-142), which checks if the operator count exceeds maxOperatorCount and calls _churnOperator(). The _churnOperator() function performs an exhaustive search:

```solidity
// EigenDARegistryCoordinator.sol:157-178
function _churnOperator(uint8 quorumNumber) internal {
    bytes32[] memory operatorList = indexRegistry().getOperatorListAtBlockNumber(quorumNumber, uint32(block.number));
    require(operatorList.length > 0, "RegCoord._churnOperator: no operators to churn");

    // Find the operator with the lowest stake
    bytes32 operatorToChurn;
    uint96 lowestStake = type(uint96).max;
    for (uint256 i; i < operatorList.length; i++) {
        uint96 operatorStake = stakeRegistry().getCurrentStake(operatorList[i], quorumNumber);
        if (operatorStake < lowestStake) {
            lowestStake = operatorStake;
            operatorToChurn = operatorList[i];
        }
    }

    // Deregister the operator with the lowest stake
    bytes memory quorumNumbers = new bytes(1);
    quorumNumbers[0] = bytes1(uint8(quorumNumber));
    _deregisterOperator({operator: blsApkRegistry().pubkeyHashToOperator(operatorToChurn), quorumNumbers: quorumNumbers});
}
```

The function iterates through all operators in the quorum to find the one with the minimum stake, then deregisters that operator.

EigenDA Payment System

1. Overview

The EigenDA payment system allows users to pay for blob dispersals through two methods: reservations and on-demand payments. All payment logic is implemented in the core/payments package.

Key Concepts:

- Blob sizes are measured in symbols, where each symbol is 32 bytes.

- Blob sizes are measured post-blob encoding.

- Blob sizes are constrained to powers-of-two: dispersals are rounded up to the next power-of-two number of symbols when computing size.

- The PaymentVault contract stores all on-chain payment-related data:

  - User reservation parameters

  - User on-demand deposits

  - Global payment parameters, including but not limited to:

    - minNumSymbols: dispersals smaller than this threshold are billed as if they were minNumSymbols in size

    - pricePerSymbol: the price per symbol (in wei) for on-demand payments
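The size and pricing rules above can be combined into a short calculation. The following is a sketch under stated assumptions, not the logic in core/payments: the function names are hypothetical, the encoded blob length is assumed to be given in bytes and rounded up to whole symbols, and the power-of-two rounding is assumed to be applied before the minNumSymbols floor.

```go
package main

import (
	"math/big"
	"math/bits"
)

const bytesPerSymbol = 32

// nextPowerOfTwo returns the smallest power of two that is >= n.
// Inputs above 2^63 are out of scope for this sketch.
func nextPowerOfTwo(n uint64) uint64 {
	if n <= 1 {
		return 1
	}
	return uint64(1) << uint(bits.Len64(n-1))
}

// billableSymbols converts an encoded blob length in bytes into the number of
// symbols that would be billed under the rules listed above.
func billableSymbols(encodedBytes, minNumSymbols uint64) uint64 {
	symbols := (encodedBytes + bytesPerSymbol - 1) / bytesPerSymbol // round up to whole symbols
	symbols = nextPowerOfTwo(symbols)
	if symbols < minNumSymbols {
		return minNumSymbols
	}
	return symbols
}

// onDemandCost returns the on-demand price in wei for a single dispersal.
// big.Int is used because wei amounts can exceed 64 bits.
func onDemandCost(encodedBytes, minNumSymbols, pricePerSymbolWei uint64) *big.Int {
	symbols := new(big.Int).SetUint64(billableSymbols(encodedBytes, minNumSymbols))
	price := new(big.Int).SetUint64(pricePerSymbolWei)
	return symbols.Mul(symbols, price)
}
```

For example, with an illustrative minNumSymbols of 4096 (the real value lives in the PaymentVault contract), a 100-byte blob is billed as 4096 symbols, while a blob one byte larger than 4096 symbols is billed as 8192 symbols.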

2. Payment Methods

2.1 Reservation Payments

- Reservations provide guaranteed bandwidth for a specified time period.

- Users reserve capacity in advance, and must "use it or lose it".

- Reservations are procured out-of-band, through Eigen Labs.

- The system uses a leaky bucket algorithm to manage usage: symbols are added to the bucket each time a blob is dispersed, and these leak out over time. A user can only make a dispersal if the leaky bucket has available capacity.

  - The total capacity of the leaky bucket is parameterized by reservation rate and duration. The size of the bucket in symbols is reservationRate * bucketDuration. This calculation controls the burstiness of reservation usage.

- Parameters describing active user reservations are kept in the PaymentVault contract:

  - symbolsPerSecond: the reservation bandwidth rate

  - startTimestamp and endTimestamp: define when the reservation is active

  - quorumNumbers: which quorums the reservation can be used for
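As a rough illustration of the leaky bucket mechanics, consider the sketch below. It is not the implementation in core/payments: the type and method names are hypothetical, time is represented as whole seconds, and the capacity check is deliberately strict, ignoring the overfill rule covered in section 2.1.3.

```go
package main

// leakyBucket is a simplified model of reservation usage tracking.
type leakyBucket struct {
	symbolsPerSecond uint64 // reservation rate
	capacity         uint64 // symbolsPerSecond * bucketDuration
	level            uint64 // symbols currently in the bucket
	lastUpdate       uint64 // time of the last leak, in seconds
}

// newLeakyBucket creates an empty bucket sized for the given rate and duration.
func newLeakyBucket(symbolsPerSecond, bucketDurationSeconds, now uint64) *leakyBucket {
	return &leakyBucket{
		symbolsPerSecond: symbolsPerSecond,
		capacity:         symbolsPerSecond * bucketDurationSeconds,
		lastUpdate:       now,
	}
}

// leak drains the bucket at the reservation rate for the time elapsed since
// the last update.
func (b *leakyBucket) leak(now uint64) {
	if now <= b.lastUpdate {
		return
	}
	leaked := (now - b.lastUpdate) * b.symbolsPerSecond
	if leaked >= b.level {
		b.level = 0
	} else {
		b.level -= leaked
	}
	b.lastUpdate = now
}

// tryDisperse adds the symbols of one dispersal to the bucket if they fit
// within the remaining capacity, and reports whether the dispersal is allowed.
func (b *leakyBucket) tryDisperse(symbols, now uint64) bool {
	b.leak(now)
	if b.level+symbols > b.capacity {
		return false
	}
	b.level += symbols
	return true
}
```

A longer bucketDuration at the same rate gives a larger capacity, so a user can send a bigger burst after an idle period, while the long-run average is still bounded by symbolsPerSecond.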

2.1.1 Source of Truth

- Validator nodes are the source of truth for reservation usage.

- Each validator keeps track of the dispersals from each user account, and will reject dispersals if the user doesn't have enough capacity.

- Clients keep a local reckoning of their own reservation usage, so that they can stay within the bounds of their reserved bandwidth.

- Dispersers also keep a local reckoning of client reservation usage, but a malicious client can bypass this check by intentionally dispersing too much data spread out over multiple dispersers. From the perspective of any given disperser, the client is within reservation limits. But in total, the client is over the limit. This isn't a problem, because validator nodes will catch the misbehavior. By having dispersers keep track of reservation usage, we are imposing a limit on how severely a client can misbehave in this way: in a system with N dispersers, a malicious client can disperse at most N * reservation rate.

- Reservation usage state agreement: since clients keep a local reckoning of reservation usage without any input from validators, it's all but guaranteed that their local state will differ (at least slightly) from the state on any given validator. This actually doesn't present a problem, so long as these key invariants are maintained:

  - A client behaving honestly must be able to disperse blobs without payment failures.

  - The amount of "free" dispersals that can be stolen by a dishonest client must be tightly limited.

2.1.2 Bucket Capacity Configuration

- We can achieve these invariants by using buckets of differing sizes between clients and validator nodes. If we make validator buckets larger than client buckets by some multiple, then slight discrepancies between client and validator are naturally smoothed out.

- If a dishonest client tries to disperse more data than allowed, the behavior will be permitted by validators for a short time, but eventually even the larger validator bucket will fill. At that point, validators will limit new dispersals from the dishonest client to the rate of the reservation, and no additional dispersals may be stolen.

- The capacity difference between client and validator buckets must be chosen to accommodate the maximum expected latency of the system. Specifically:

  - validatorBucketCapacity - clientBucketCapacity = reservationRate * maxSystemLatency

  - This ensures that honest clients operating at full capacity won't be rejected due to timing discrepancies.

- Current bucket size configuration:

- Client buckets use a duration of 1 minute.

- Disperser buckets use a duration of 1.5 minutes.

- Validator buckets use a duration of 2 minutes, accommodating up to 1 minute of system latency.
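The relationship between these durations and the tolerated latency can be checked with a few lines of arithmetic. The snippet below is a worked example only; the constant and function names are hypothetical, and the durations are the ones listed above expressed in seconds.

```go
package main

// Bucket durations in seconds, taken from the configuration listed above.
const (
	clientBucketSeconds    = 60
	disperserBucketSeconds = 90
	validatorBucketSeconds = 120
)

// bucketCapacity returns the bucket size in symbols for a reservation rate.
func bucketCapacity(symbolsPerSecond, bucketSeconds uint64) uint64 {
	return symbolsPerSecond * bucketSeconds
}

// toleratedLatencySeconds solves
//   validatorBucketCapacity - clientBucketCapacity = reservationRate * maxSystemLatency
// for maxSystemLatency, using the durations above.
func toleratedLatencySeconds(symbolsPerSecond uint64) uint64 {
	if symbolsPerSecond == 0 {
		return 0 // no reservation, nothing to tolerate
	}
	diff := bucketCapacity(symbolsPerSecond, validatorBucketSeconds) -
		bucketCapacity(symbolsPerSecond, clientBucketSeconds)
	return diff / symbolsPerSecond
}
```

For any non-zero rate the result is 60 seconds, since the rate cancels out: the tolerated latency is simply the difference between the validator and client bucket durations.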

2.1.3 Leaky Bucket Overfill

The reservation leaky bucket implementation permits clients to overfill their buckets, with certain constraints:

- If a client has any available capacity in their bucket, they may make a single dispersal up to the maximum blob size, even if that dispersal causes the bucket to exceed its maximum capacity.

- When this happens, the bucket level actually goes above the maximum capacity, and the client must wait for the bucket to leak back down below full capacity before making the next dispersal.

- This feature exists to solve a problem with small reservations: without overfill, a reservation might be so small that its total bucket capacity is less than the max blob size, which would prevent the user from dispersing blobs up to max size.

- By permitting a single overfill, even the smallest reservation can disperse blobs of maximum size.
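The overfill rule can be expressed as a small admission function. This is a sketch, not the actual implementation: admitWithOverfill is a hypothetical name, and the bucket level passed in is assumed to have already been leaked down to the current time.

```go
package main

// admitWithOverfill decides whether a dispersal of the given size is allowed
// and returns the resulting bucket level. All quantities are in symbols.
func admitWithOverfill(level, capacity, symbols, maxBlobSymbols uint64) (uint64, bool) {
	// A single dispersal can never exceed the maximum blob size.
	if symbols > maxBlobSymbols {
		return level, false
	}
	// No available capacity: the bucket is full or still overfilled from an
	// earlier dispersal, so the client must wait for it to leak.
	if level >= capacity {
		return level, false
	}
	// Any available capacity admits the dispersal, even if the new level
	// ends up above the nominal capacity.
	return level + symbols, true
}
```

For a reservation whose bucket holds only 1000 symbols, a 4096-symbol blob is admitted when the bucket is below capacity and pushes the level to at least 4096; further dispersals are rejected until the level leaks back below 1000.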

2.1.4 Reservation Usage Persistence

The leaky bucket algorithm does not require persisting reservation usage state across system restarts. Different system components initialize their buckets with opposing biases to maintain system integrity without persistence:

Client Initialization (Conservative Bias)

- Clients initialize their leaky bucket as completely full (no capacity available) upon restart.

- They must wait for symbols to leak out before dispersing, guaranteeing compliance with reservation rate limits.

- While this may result in slight underutilization if usage was low before restart, it prevents violation of reservation limits.

Validator Initialization (Permissive Bias)

- Validators initialize leaky buckets as completely empty (full capacity available) upon restart.

- This ensures they never incorrectly deny service to users entitled to a reservation.